It's been a month since I last wrote about our work on ChatCraft.org, the developer-focused open source AI project that I've been working on with Taras. In that time, we've been able to iterate on the UX, work with users to add new features, and implement a number of the ideas I described in my last post. It's coming along really well, so I thought I'd take a few minutes to show you what we have right now.

As I've worked on the code, and used it myself, the definition of what we're building has evolved. At its most basic level, ChatCraft.org is a personal, web-based tool for discussing code and software development ideas with large language models. "So it's ChatGPT, right?" Sort of, but increasingly less so. ChatCraft is really a tool for iteratively writing and thinking about code with LLMs.

As an open source software developer and educator, I spend the majority of my day in the GitHub UI: writing feedback, reviewing code, reading and adding comments to issues and PRs, and searching. The flows and conventions of reading and writing on GitHub are now built into how I want to work: linkable, editable Markdown everywhere. As such, I've had a strong desire to bring the way I talk with developers into my LLM conversations. These are obviously completely different activities, in both aim and outcome, but they share an important idea. In both cases, I'm thinking through writing.

Much as I'm doing now in this post, I write to understand and solidify my thinking. When I'm blogging, I'm trying to communicate what I'm experiencing, first to myself, but also to others. Similarly, when I'm working on GitHub, I'm developing a line of thought and a line of commits at the same time.

Taras laughs at me for doing everything in pull requests vs. simply landing small things on main. However, I find that I need the extra layer of explanation and writing to wrap around my code. Just as I'm hoping to cleanly merge my branch with yours, I also need to find a way to turn what I'm thinking into something that can be integrated with what you are thinking. I do this through writing, sometimes in code, more often in prose.

Because I write so much on GitHub, I've come to appreciate its affordances. I think this comfort and familiarity with its UX has influenced how I wanted to see ChatCraft evolve. Let me show you some of what I mean.

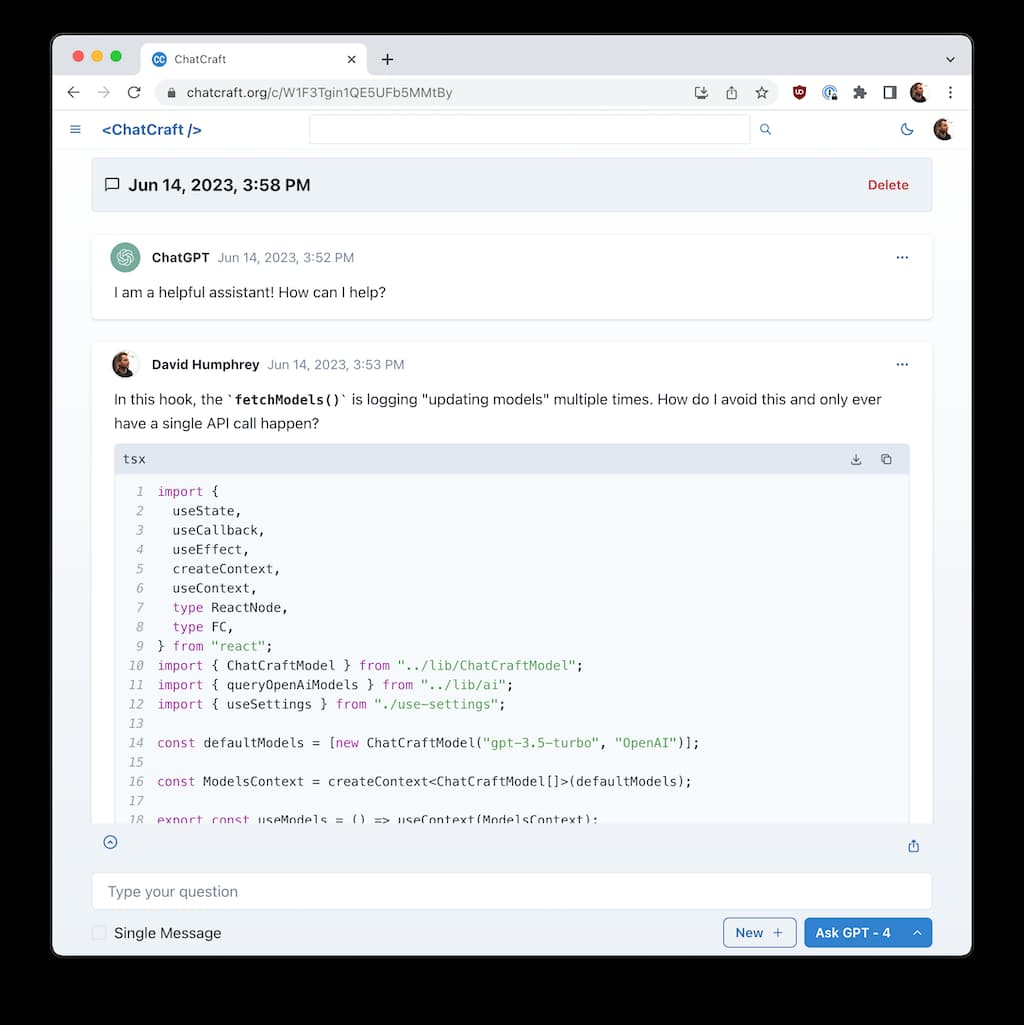

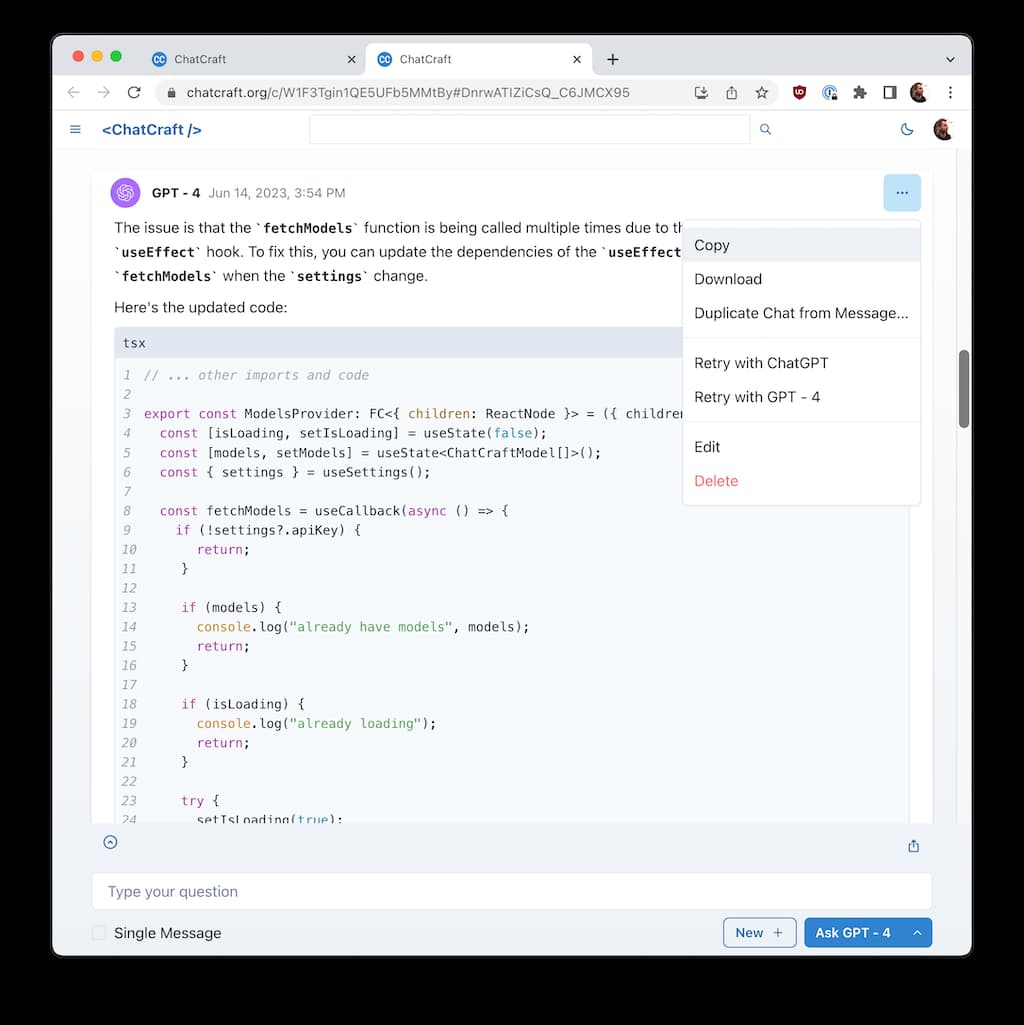

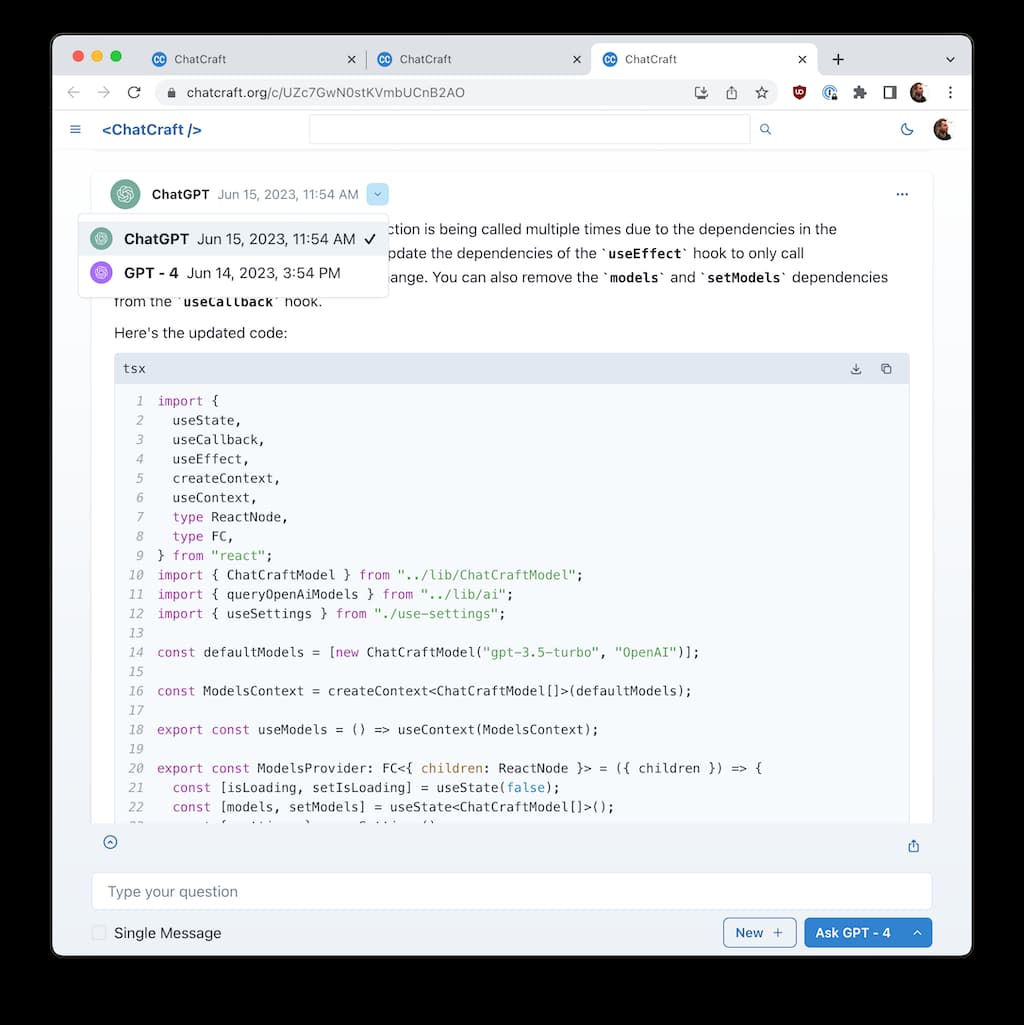

Here's the start of my most recent chat with ChatCraft, in which I'm fighting with a re-rendering bug in a hook within ChatCraft:

All chats have their own URL and get stored in a local database. This chat is https://chatcraft.org/c/W1F3Tgin1QE5UFb5MMtBy. You won't be able to open that link and get my data because it's stored in IndexedDB in my browser. We're using Dexie.js to interact with the database, and it's been fabulous to use with React. Taras has dreams of using SQLite with WASM in a Worker down the road, but I wanted to get something working now.

Like chats, each message also has its own link, allowing me to deep-link to anything in my history. My first message above is reachable via https://chatcraft.org/c/W1F3Tgin1QE5UFb5MMtBy#DnrwATIZiCsQ_C6JMCX95, which I get by clicking on its date (Jun 14, 2023, 3:53 PM).

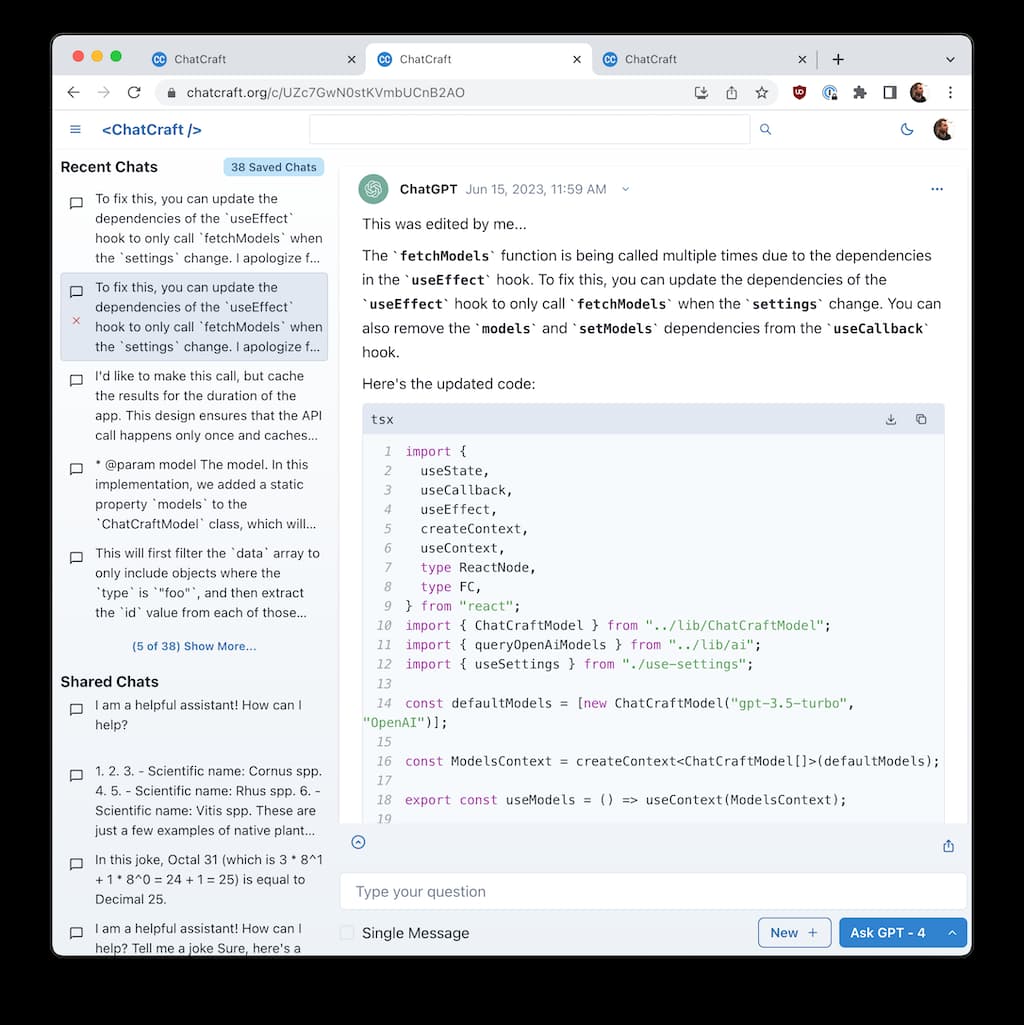
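To make the link structure concrete, here is a minimal TypeScript sketch that pulls the chat ID and message ID back out of a link like the one above. It assumes the chat ID is the path segment after `/c/` and the message ID is the URL fragment, as in the example link; `parseChatLink` and `ChatLink` are hypothetical names for illustration, not ChatCraft's actual routing code.

```typescript
// Hypothetical shape of a parsed link: the chat ID from the path,
// plus an optional message ID from the URL fragment.
interface ChatLink {
  chatId: string;
  messageId?: string;
}

// Parse a link of the form https://chatcraft.org/c/<chatId>#<messageId>.
// Returns null for paths that don't match /c/<chatId>.
// Note: new URL() throws a TypeError if href is not an absolute URL.
function parseChatLink(href: string): ChatLink | null {
  const url = new URL(href);
  // "/c/W1F3..." splits into ["", "c", "W1F3..."]; drop the empty segments.
  const segments = url.pathname.split("/").filter((s) => s.length > 0);
  if (segments.length !== 2 || segments[0] !== "c") {
    return null;
  }
  // url.hash includes the leading "#"; an empty hash means "the whole chat".
  const messageId = url.hash.length > 1 ? url.hash.slice(1) : undefined;
  return { chatId: segments[1], messageId };
}
```

Called on the message link above, this yields a `chatId` of `W1F3Tgin1QE5UFb5MMtBy` and a `messageId` of `DnrwATIZiCsQ_C6JMCX95`; the plain chat link yields the same `chatId` with no `messageId`.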

I can also open the sidebar (via the hamburger menu), revealing previous and shared chats (more on that below), making it easy to go back to something I was working on before:

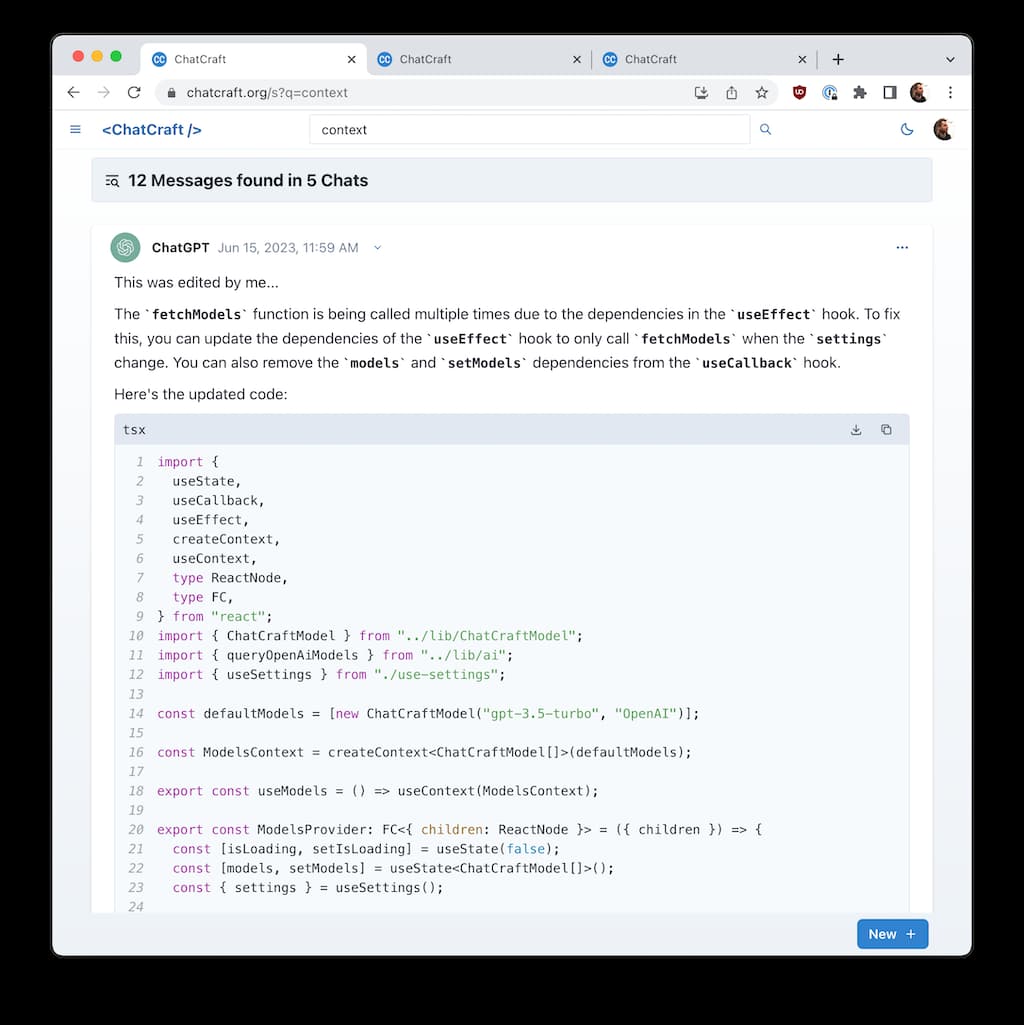

If I instead want to search for something, all of my old chats and messages are indexed. Here I'm searching for "context", which returns a bunch of messages from various chats. Clicking on any of their links (i.e., dates) takes me to the chat itself:

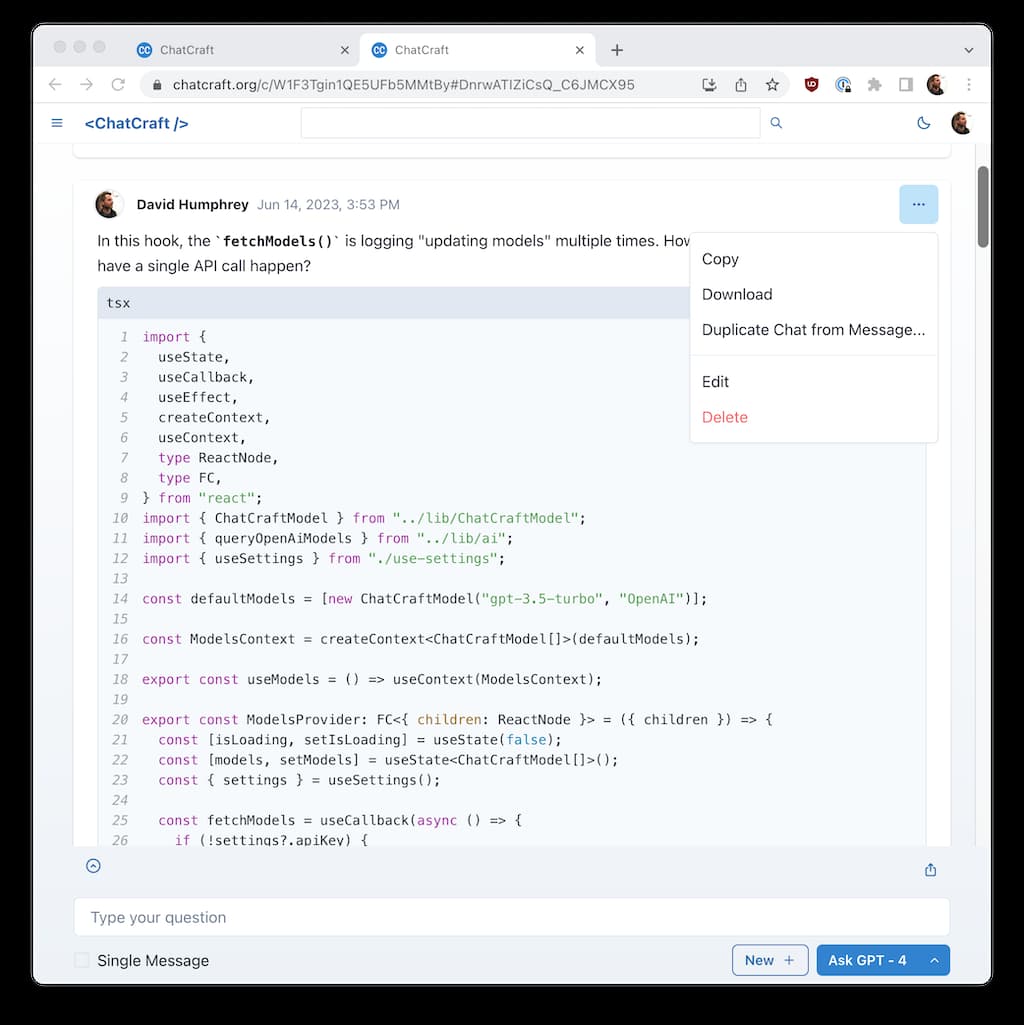
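Each search result needs to map back to a chat ID and a message ID so it can link straight to the message. A naive sketch of that mapping follows. The real feature works over indexed data in IndexedDB; this version instead does a linear, case-insensitive substring scan over an in-memory array, and the `StoredMessage` shape and `searchMessages` function are hypothetical.

```typescript
// Hypothetical minimal message record; ChatCraft's real schema may differ.
interface StoredMessage {
  id: string;
  chatId: string;
  date: Date;
  text: string;
}

interface SearchHit {
  chatId: string;
  messageId: string;
  url: string; // deep link to the message, built like the example links above
}

// Naive case-insensitive substring search over every stored message.
// Returns no hits for an empty or whitespace-only query.
function searchMessages(messages: StoredMessage[], query: string): SearchHit[] {
  const needle = query.trim().toLowerCase();
  if (needle === "") {
    return [];
  }
  return messages
    .filter((m) => m.text.toLowerCase().includes(needle))
    // Newest first (a choice made for this sketch).
    .sort((a, b) => b.date.getTime() - a.date.getTime())
    .map((m) => ({
      chatId: m.chatId,
      messageId: m.id,
      url: `https://chatcraft.org/c/${m.chatId}#${m.id}`,
    }));
}
```

A linear scan is fine for a sketch, but it touches every message on every query, which is why a real implementation would lean on the database's indexes instead.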

Every message supports various actions via a "dots" menu on the right. For example, here are 1) a message written by me, followed by 2) a message by an LLM:

In both cases, I can Copy, Download, Duplicate, Edit, and Delete. Most of these are self-explanatory, but I'll call out Duplicate and Edit.

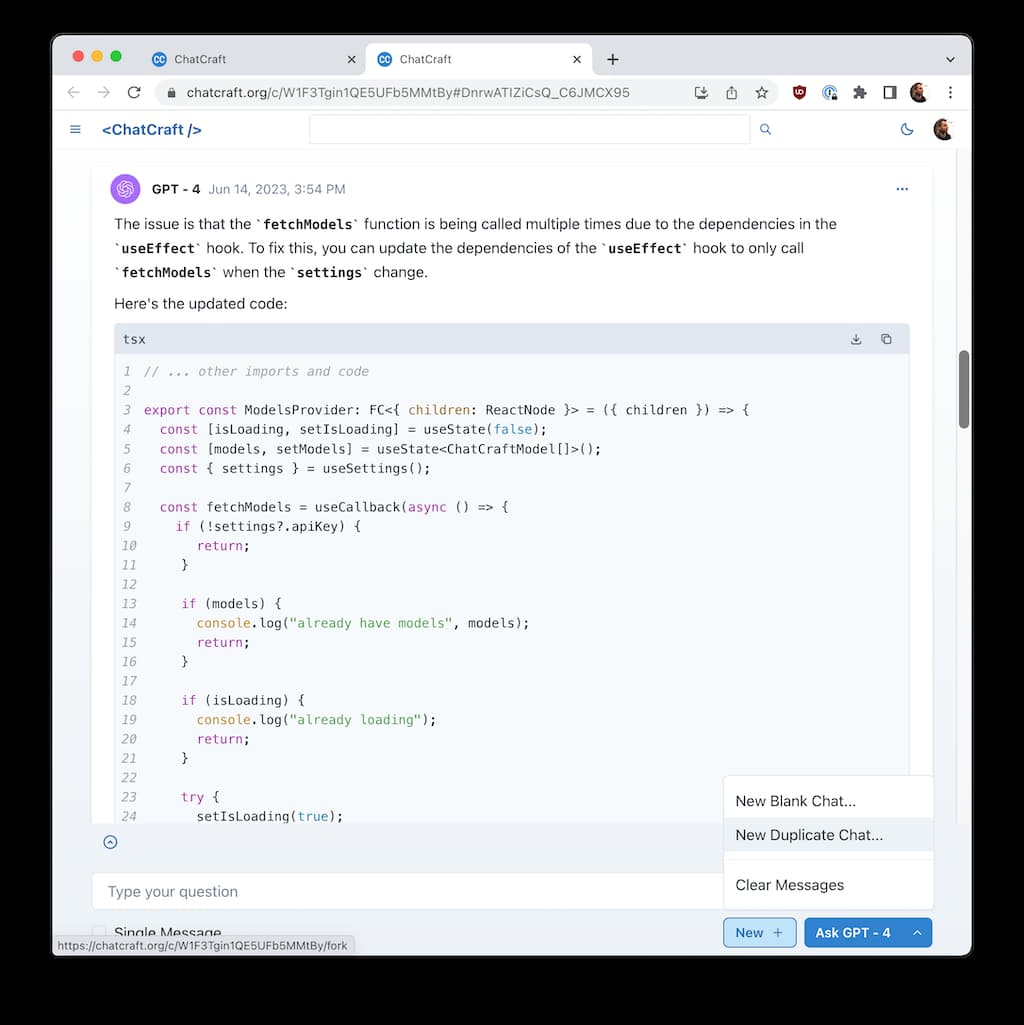

We had users asking to be able to "fork" a conversation, taking it in multiple directions at once. We let you do that at the level of the whole chat (i.e., create a new chat in the DB with its own URL and copy all the messages) or from a particular message in a chat (i.e., copy only that message and the ones before it, instead of the whole thing).

This is really useful when you want to experiment with different paths through a conversation, trying alternate prompts without erasing your current work. Because each chat has its own URL, you can work in multiple tabs at the same time.

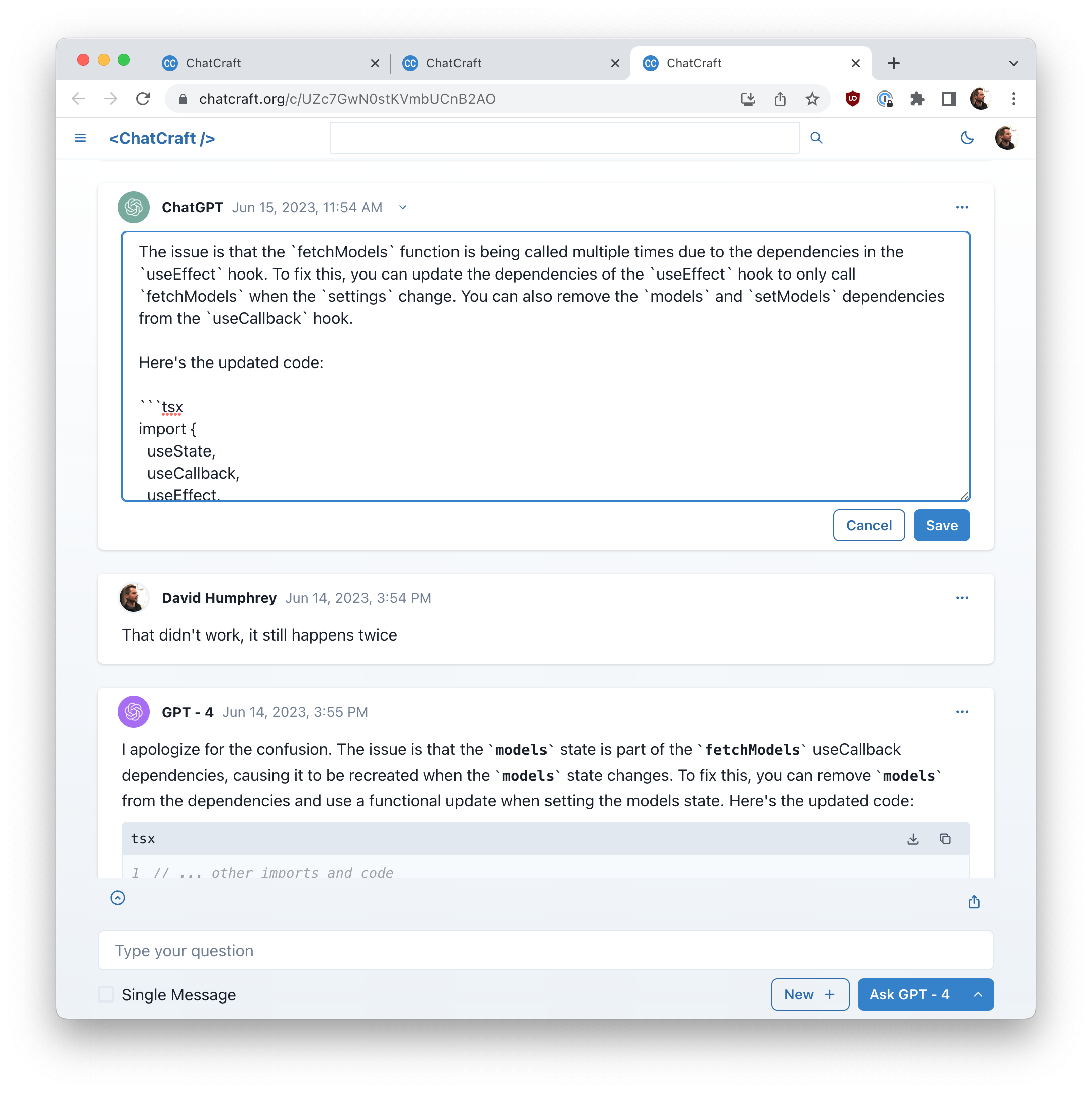
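The two forms of Duplicate described above (copy the whole chat, or copy everything up to and including a chosen message) can be sketched as one function. The `Chat` and `ChatMessage` types and `duplicateChat` are hypothetical. The sketch also assumes messages are stored under their own IDs, so the copies get fresh ones from a caller-supplied `newId` generator.

```typescript
// Hypothetical minimal types for illustration.
interface ChatMessage {
  id: string;
  role: "user" | "assistant" | "system";
  text: string;
}

interface Chat {
  id: string;
  messages: ChatMessage[];
}

// Copy a chat into a new chat with a fresh ID. If untilMessageId is given,
// keep messages from the start up to and including that message, and drop the rest.
function duplicateChat(chat: Chat, newId: () => string, untilMessageId?: string): Chat {
  let messages = chat.messages;
  if (untilMessageId !== undefined) {
    const index = messages.findIndex((m) => m.id === untilMessageId);
    if (index === -1) {
      throw new Error(`Message ${untilMessageId} not found in chat ${chat.id}`);
    }
    messages = messages.slice(0, index + 1);
  }
  return {
    id: newId(),
    // Copy each message with a new ID so edits to the fork never touch the original.
    messages: messages.map((m) => ({ ...m, id: newId() })),
  };
}
```

Because the original chat is never mutated, both chats can stay open in separate tabs and evolve independently.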

The Edit feature is also both obvious and amazing. I've wanted it for a long time. Using it, I can put myself in the driver's seat for all interactions. Rather than passively reading and accepting an LLM's response, I can (and do!) edit it to reflect the way I want things to move. Having the ability to edit and delete text anywhere in a chat gives incredible freedom to experiment and explore. I'm no longer beholden to AI hallucinations, my own typos, or Markdown formatting issues. Also, I'm not locked into a past version of how things went in a chat. I can always rework anything to fit new directions. Remember that a chat is really a context for the messages that will follow, so it's helpful to be able to alter your current context to meet new expectations as the chat unfolds.

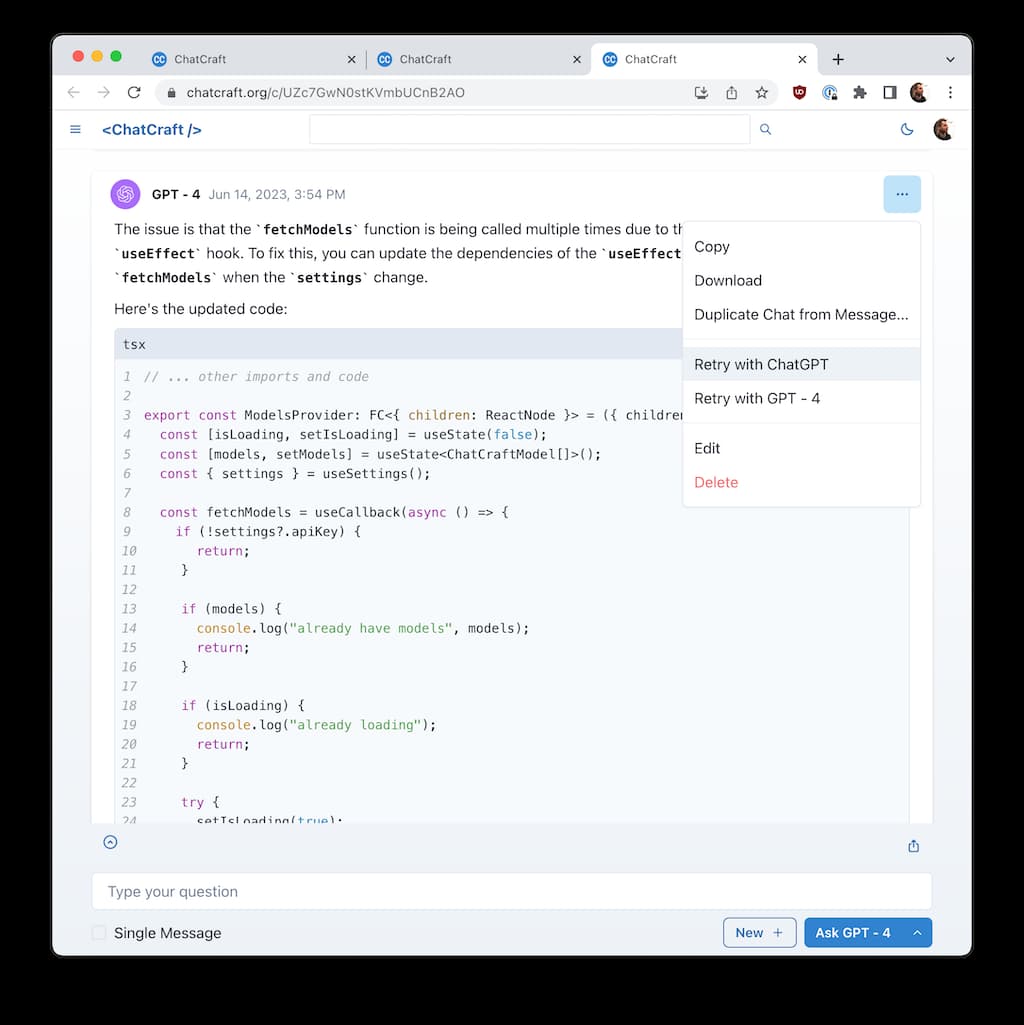

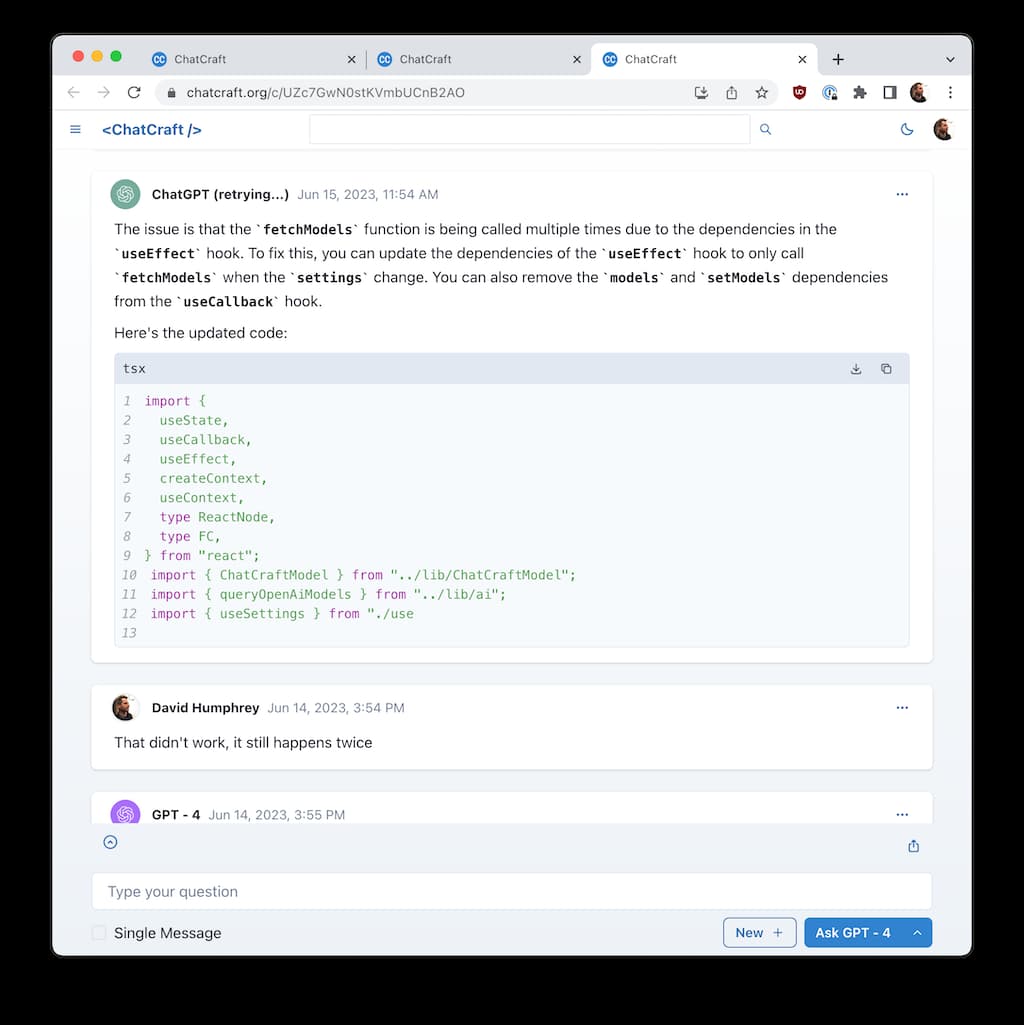

Extending this idea even further, another feature we've recently shipped is the ability to Retry an AI message with a different language model. Imagine you're 5 messages deep into a conversation with GPT-3.5 and you wonder what GPT-4 might have said in response to the third message. Or maybe you'd like to mix GPT-3.5 with GPT-4 in the same conversation, or compare how they work. It's really simple to do:

Here I've taken a response from GPT-4 and retried it with ChatGPT. The old response is saved as a version alongside the new one, and the new response is streamed into the current message. I can easily switch back and forth between the two, which makes comparing them simple (I'm amazed how little they differ most of the time). I can also edit one of the responses and create a third version:

The flexibility to mix and match LLMs, edit and retry prompts, delete messages that don't make sense, and duplicate some or all of a chat to keep going in new directions has made writing about code with LLMs incredibly productive for me.

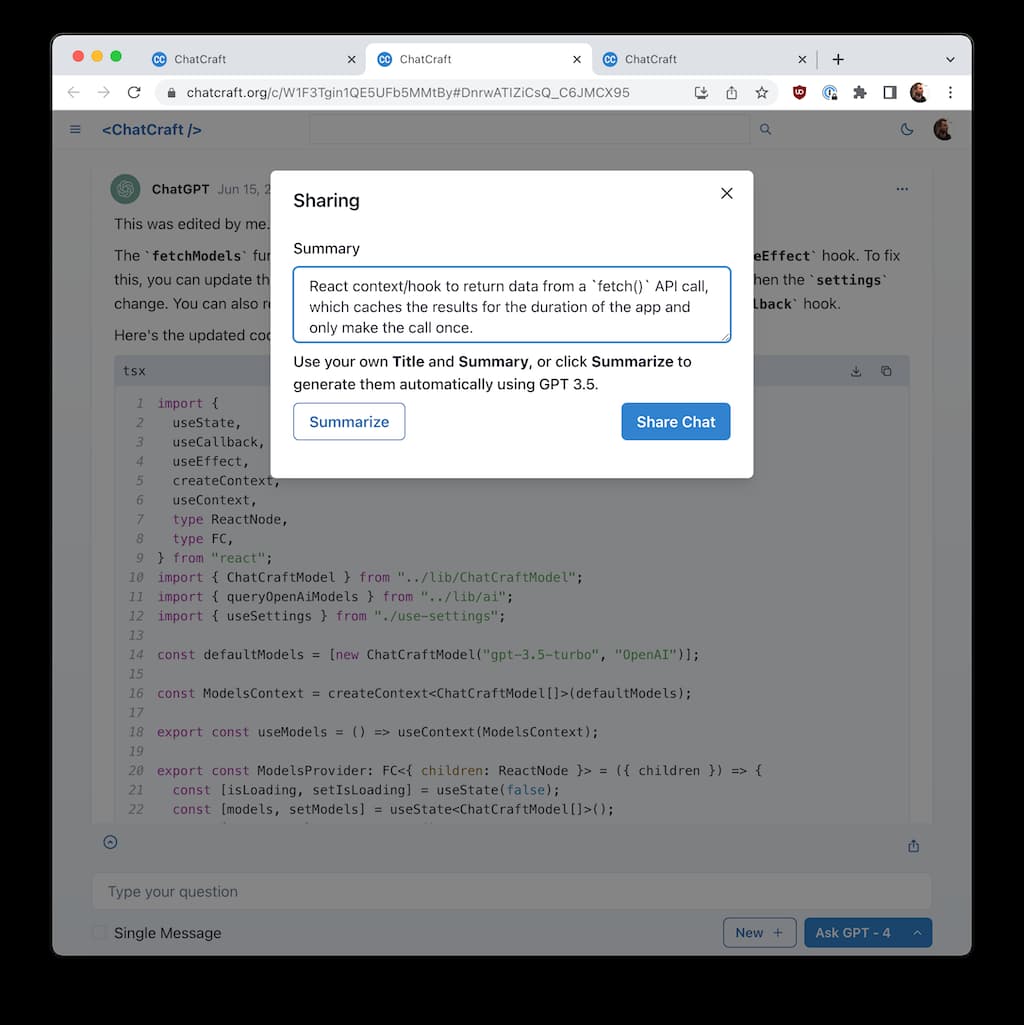

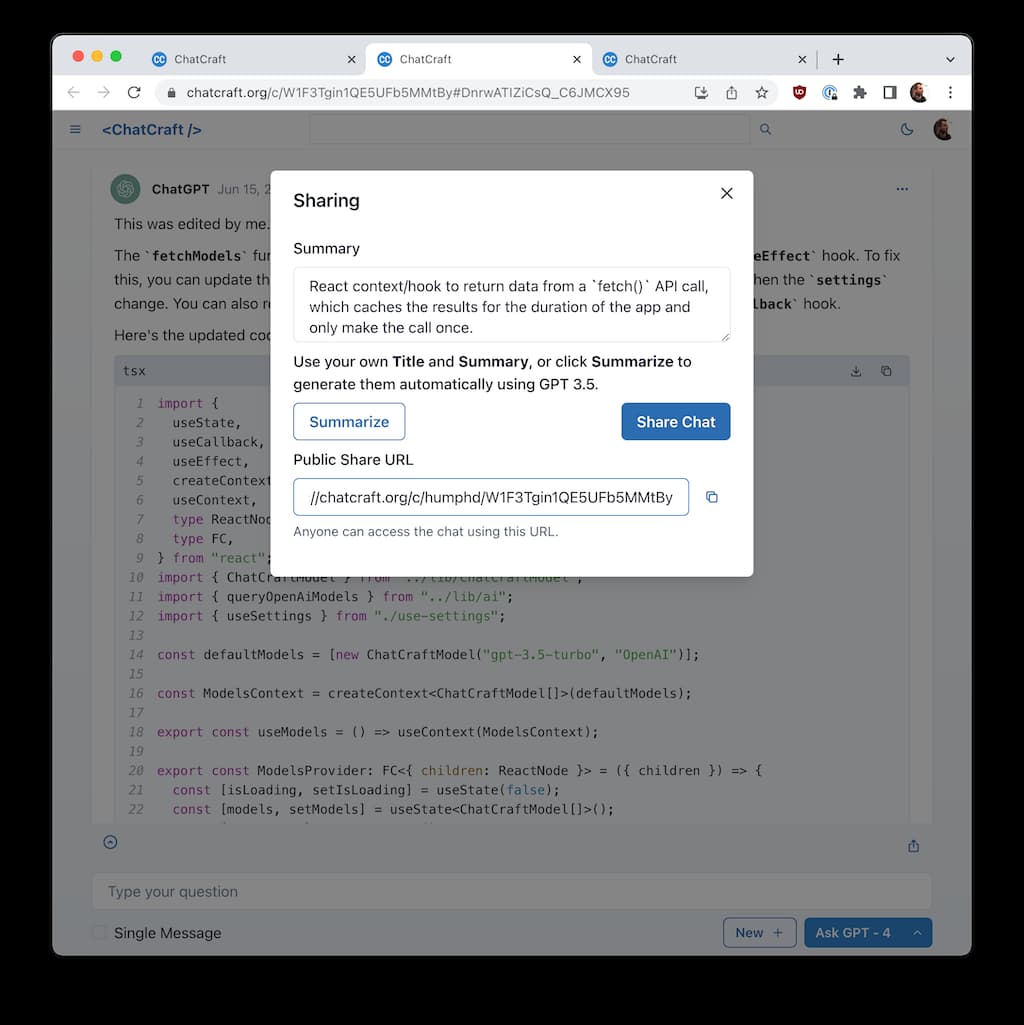
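Retry and Edit both come down to keeping a list of versions for each message and tracking which one is currently shown. Here is a small sketch of that idea. The `VersionedMessage` shape, the helper names, and the model strings are assumptions for illustration, and the sketch leaves out streaming the new response.

```typescript
// Hypothetical record of one response: which model produced it, and its text.
interface MessageVersion {
  model: string;
  text: string;
}

interface VersionedMessage {
  versions: MessageVersion[];
  current: number; // index of the version currently shown
}

// Retrying (or saving an edit) appends a version and makes it current;
// earlier versions are kept so you can switch back to them.
function addVersion(msg: VersionedMessage, version: MessageVersion): VersionedMessage {
  const versions = [...msg.versions, version];
  return { versions, current: versions.length - 1 };
}

// Show a different saved version without discarding any of them.
function switchVersion(msg: VersionedMessage, index: number): VersionedMessage {
  if (index < 0 || index >= msg.versions.length) {
    throw new RangeError(`No version at index ${index}`);
  }
  return { ...msg, current: index };
}

function currentText(msg: VersionedMessage): string {
  return msg.versions[msg.current].text;
}
```

Treating versions as append-only is what makes comparison cheap: retrying with another model never destroys the answer you already had.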

Taras has also been really bullish about getting sharing to work. We've built an initial version on top of Cloudflare Pages and Functions, with GitHub OAuth for authorization and R2 as our object store. I've wanted to learn Cloudflare's serverless tools for a while in order to compare them with what I know from AWS. I've been impressed so far, and have filed issues on things that were harder than they needed to be.

Here's what sharing looks like:

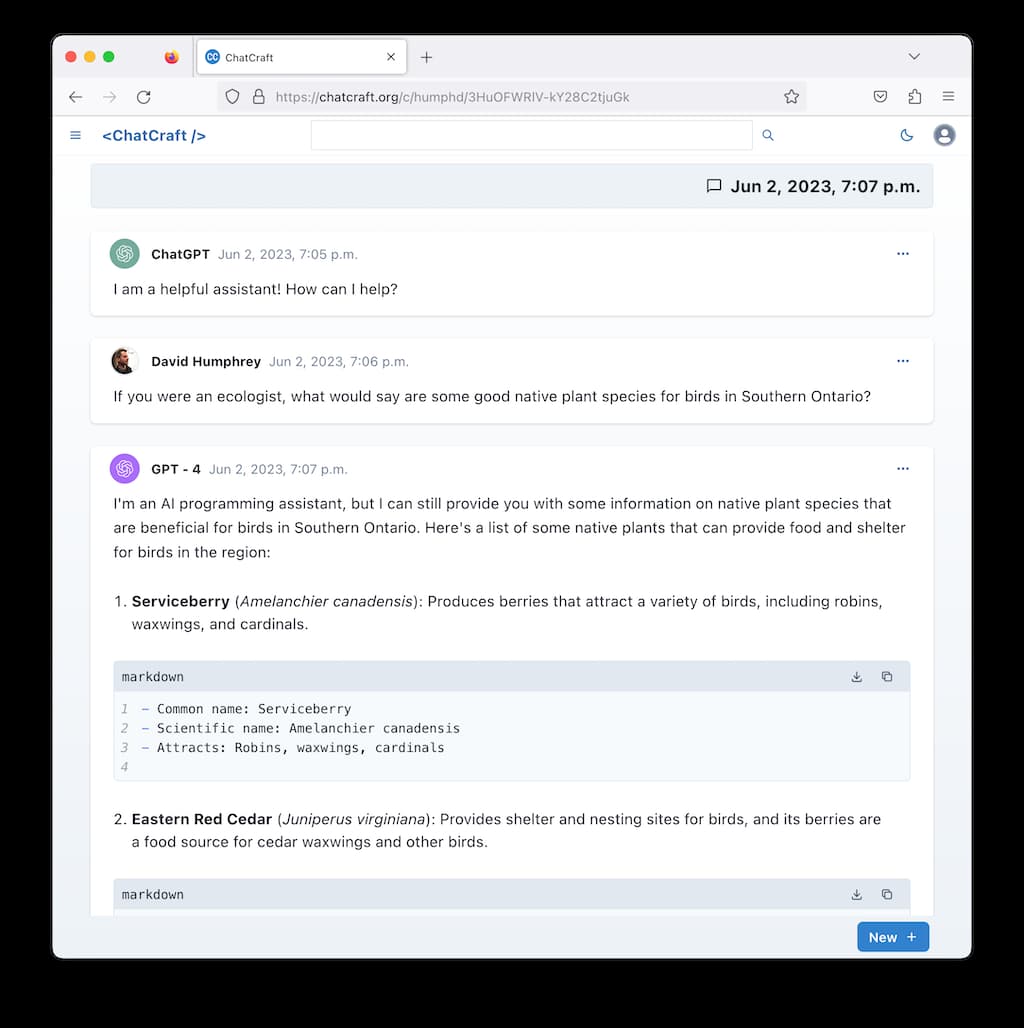

After authenticating with GitHub, I can manually or automatically summarize my chat and click Share Chat. This creates a public URL that I can share with friends (NOTE: As I write this, I notice that we've broken something with loading shared chats, which I've filed to fix later. You can try this one, which still works: https://chatcraft.org/c/humphd/3HuOFWRlV-kY28C2tjuGk):

When I open a shared URL in a browser where I'm not logged in, I can read and duplicate the chat, whereas if I were logged in I could also edit it:

I'm excited to extend what we have so far and fix its bugs. Taras has a PR up to add dynamic model support, which will make it easier to try out more models. We're also hoping to add models from vendors other than OpenAI. I'm looking forward to a more "social" experience that mixes different LLMs in the same chat, which is easy now with the features I just outlined. We're also interested in exploring adding tools and functions to the mix.

I don't think I'm alone in my belief that writing, and tools that support good writing, are the keys to unlocking LLMs. This week, a tweet of mine about people reflexively accepting Copilot suggestions got close to a million views (lots of people seem to recognize what I was concerned about). I think AI has tremendous power to support software developers, but as an educator who spends so much time with the next generation trying to learn our craft, I'm not convinced that we're getting this right in all cases.

With ChatCraft, I'm hoping to do the opposite. I want to read, write, edit, review, and compare text with LLMs. I'm not interested in having text dropped into my editor, word processor, or other tools as-is. I'm looking for, and trying to build, tools for thought.

I suspect that ChatCraft will look different again in another few months. Maybe that will be because you've gotten involved and helped us do something cool. In the meantime, I'm having fun in my spare time exploring what I think AI and coding can do.