Yesterday we shipped Processing.js 1.3.5. This release was mostly devoid of new code, and instead focused on fixing various issues that came up in our 1.3.0 release. By the time we finished fixing the "few" things we'd found wrong with 1.3.0, we had about 50 bugs filed and fixed.

Actually shipping a version of Processing.js takes many days. We have automated build and release systems, so it's not the release itself that takes time; it's the final testing. Before we land any code change or fix, we run ~5,000 automated tests covering functional correctness (unit tests), the parser (Processing to JavaScript), visual reference tests (does code A still draw the same way it used to?), and performance tests (did this code get slower?). Every change goes through two levels of review, which means that it usually gets tested on multiple platforms and half a dozen browsers. In short, we typically have a pretty high degree of confidence in any change that goes into our tree.

This happens for every patch. Then, before we ship, we run a set of manual tests on Windows, Linux, Mac, Android, and iOS, using Chrome, Safari, Firefox, IE9, Opera, and various mobile browsers. We almost always find issues when we do our final testing (this time we found a bad PImage cache bug we'd introduced). When we're done, we know that our code is in pretty good shape.

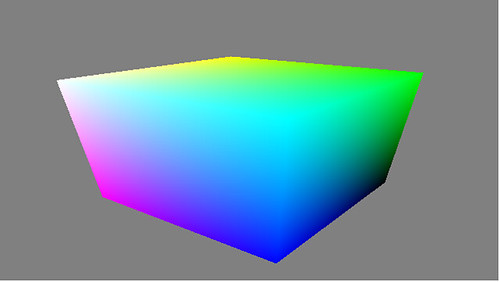

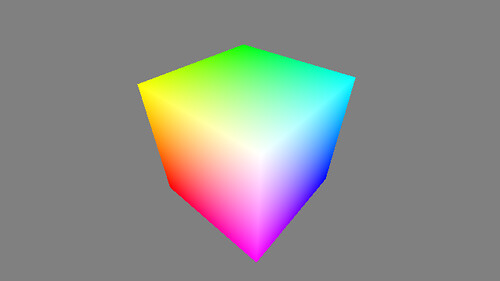

And despite all this we still get it wrong sometimes. After shipping 1.3.5, and feeling pretty good about our work, Casey filed a new bug, while 1.3.5 was only 30 minutes old. Here's what he was seeing, followed by what it looked like with 1.3.0 (notice the skew):

Jon and I used git bisect to find the offending commit, and quickly found the culprit: our camera() code was exposing a call-order bug, where sizes aren't being set up properly in two different variables.

What's most interesting about this bug for me is that it would have been impossible for our current tests to ever catch it. Our visual ref tests are currently always 100 x 100 pixels in size, and this bug is only triggered when the canvas's width and height differ.
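To make the square-canvas blind spot concrete, here is a minimal sketch. It is not the Processing.js camera() code: `aspect`, `buggyAspect`, and `sizesThatExposeBug` are hypothetical names, and the "bug" is simply two dimensions getting mixed up, which I'm assuming is a fair stand-in for this kind of sizing mistake.

```javascript
// Hypothetical illustration only; none of this is Processing.js source.

// Correct aspect ratio for a projection: width divided by height.
function aspect(width, height) {
  return width / height;
}

// Stand-in for a sizing bug: the two dimensions are mixed up.
function buggyAspect(width, height) {
  return height / width;
}

// Returns the canvas sizes on which the two implementations disagree.
function sizesThatExposeBug(sizes) {
  return sizes.filter(function (size) {
    var w = size[0];
    var h = size[1];
    return aspect(w, h) !== buggyAspect(w, h);
  });
}

// A suite that only uses square canvases never sees a difference...
var squareOnly = sizesThatExposeBug([[100, 100]]);

// ...while adding non-square sizes exposes it.
var mixed = sizesThatExposeBug([[100, 100], [200, 100], [100, 300]]);

console.log(squareOnly.length); // 0
console.log(mixed.length);      // 2
```

On a 100 x 100 canvas both functions return 1, so any test comparing their output passes. Only the non-square sizes tell the two apart.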

After we fix it, writing a proper regression test will mean doing something different than we usually do: testing with a canvas that isn't square. The test will be easy to write, and we won't ever reintroduce this bug. But it took an edge case, and a user filing a bug, for us to get here.

You can write all the tests you want, and you can run them faithfully, but the absence of test failures will never be the same as the absence of bugs. This highlights why you need a multi-pronged approach to fixing things: active users who push your code to the limit and tell you when it fails, a body of tests that protects you from your past mistakes, a set of processes that force you to do extra checks even when you don't feel like it, and finally, the humility to accept that your code will always need improvements and will always contain bugs.

I learned long ago, as a Mozilla developer, that all code sucks, all code hides bugs, and there's nothing you can do about it. It's actually very liberating once you learn this lesson, since it allows you to recover more quickly from failed patches, accept criticism in reviews, and never judge other programmers as though you aren't also subject to the same laws of programming.

Anyway, Processing.js 1.3.6 is coming soon, featuring less skew and more regression tests :)