As a father, you get to see many milestones, from watching your children learn to walk and talk to seeing them ride a bike and drive a car. Sometimes you're lucky enough to be involved in these experiences and get to share in the joy of discovery and the feeling of success. This week was like that for me. I got the chance to help my youngest daughter release her first open source project.

Automated Camera Trapping Identification and Organization Network (ACTION) is a command-line tool that uses AI models to automate camera trap video analysis. It works on both terrestrial (mammals, etc.) and aquatic (fish) camera footage. Typically, a project will have hundreds or thousands of videos, and manually scrubbing through them to find positive detections is incredibly time-consuming and error-prone.

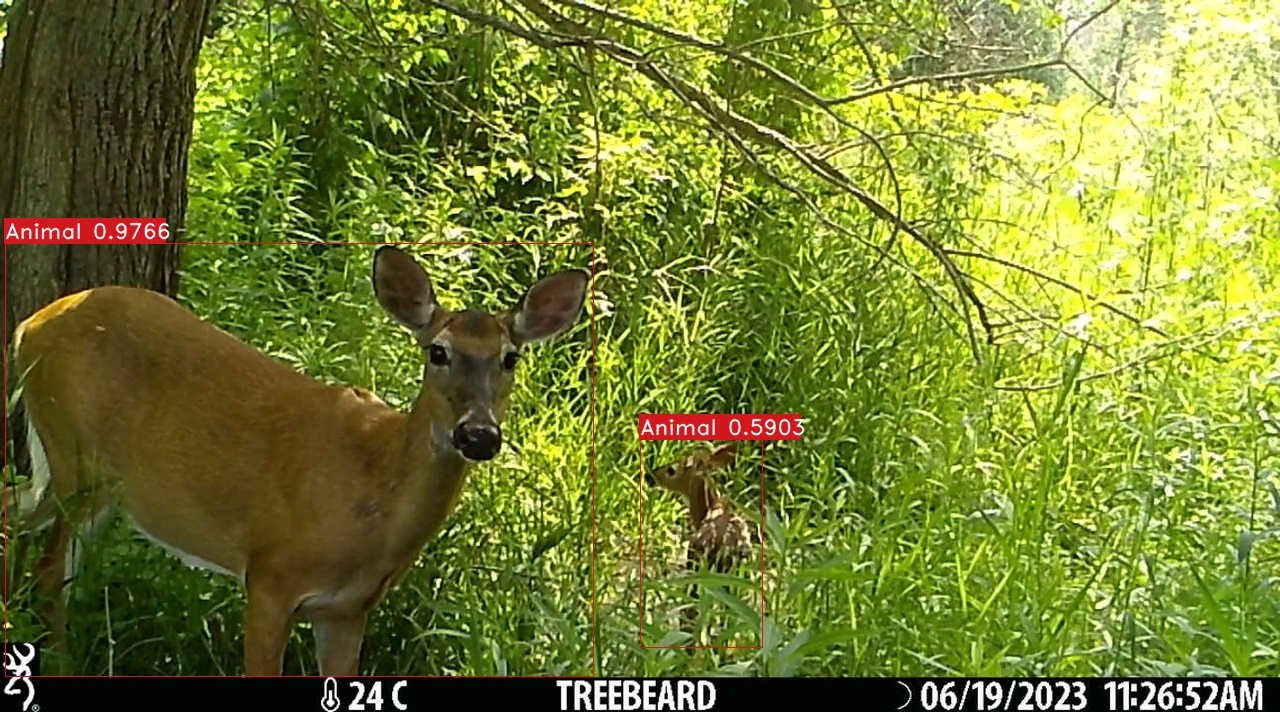

Using ACTION, one or more videos can be analyzed with AI models specially trained to detect animals in camera trap footage or fish in underwater video. It uses the open source YOLO-Fish and MegaDetector neural networks to build a video analysis pipeline. The models detect an "animal" or "fish" in an image and assign each detection a confidence score.
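
Everything downstream of the model starts by discarding weak detections. The sketch below is a minimal illustration, not ACTION's actual code: `Detection`, `filter_detections`, and `frame_has_target` are hypothetical names, and it assumes each frame's model output has already been decoded into labelled boxes with confidence scores.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Detection:
    """One detection in a single frame (hypothetical structure, not ACTION's)."""
    label: str                               # e.g. "animal" or "fish"
    confidence: float                        # model score in [0, 1]
    box: tuple[float, float, float, float]   # (x1, y1, x2, y2) in pixels


def filter_detections(detections, threshold=0.5, labels=None):
    """Keep detections scoring at or above `threshold`, optionally only for `labels`."""
    return [
        d for d in detections
        if d.confidence >= threshold and (labels is None or d.label in labels)
    ]


def frame_has_target(detections, threshold=0.5, labels=None):
    """True if at least one detection in the frame survives filtering."""
    return bool(filter_detections(detections, threshold, labels))
```

For example, a frame with one fish scored at 0.82 and another at 0.31 counts as a positive detection at a threshold of 0.5, but only the first box is kept.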

However, as powerful as these AI models are, they aren't programs you can run on their own to accomplish real-world tasks. I don't think people realize this, since it seems like everything has "AI" in it these days. The reality is quite different: you can use powerful AI models to do superhuman things, but you have to build custom pipelines around them to extract video frames, resize images, process detections, draw bounding boxes, cut clips, create new videos, and let the user customize many settings. Then you have to package it all up in a way that others can use without downloading and installing half the internet. The process turns out to be quite involved and, dare I say, worthy of its own open source project!
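
Even a step as mundane as resizing has to be reversible, because boxes predicted on the resized image must be drawn back onto the original frame. One common approach for YOLO-style detectors is letterboxing: scale the frame to fit a square input while preserving its aspect ratio, then pad the rest. This is a minimal sketch with hypothetical helper names; the `dst_size=640` default is only a placeholder, so check the input size your ONNX model actually expects.

```python
def letterbox_params(src_w, src_h, dst_size=640):
    """Scale factor and padding that fit a src_w x src_h frame into a dst_size square."""
    scale = min(dst_size / src_w, dst_size / src_h)  # preserve aspect ratio
    new_w, new_h = round(src_w * scale), round(src_h * scale)
    pad_x = (dst_size - new_w) / 2  # padding on the left and right
    pad_y = (dst_size - new_h) / 2  # padding on the top and bottom
    return scale, pad_x, pad_y


def box_to_original(box, scale, pad_x, pad_y):
    """Map an (x1, y1, x2, y2) box from model-input coords back to the original frame."""
    x1, y1, x2, y2 = box
    return (
        (x1 - pad_x) / scale,
        (y1 - pad_y) / scale,
        (x2 - pad_x) / scale,
        (y2 - pad_y) / scale,
    )
```

For a 1920x1080 frame, this gives a scale of 1/3 with 140 pixels of padding above and below the image, and a box covering the unpadded region maps back to the full frame.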

Like all useful tools, ACTION was born out of genuine need. My daughter, who is studying biodiversity at university, is currently completing an internship with a local conservation authority that is studying the endangered Redside Dace in local aquatic ecosystems.

Specifically, its researchers have been comparing the effectiveness of different conservation monitoring technologies, from electrofishing to eDNA (environmental DNA) to "aquatic camera trapping." The project seemed like a great fit, since my daughter is an expert on camera trapping, with years of field experience. However, applying the same ideas to underwater cameras was a totally new challenge.

Typically, camera traps use motion and/or heat sensors to detect animals and trigger recordings. Underwater, these techniques don't translate as well, since temperature, visual noise (e.g., debris and water turbidity), and light levels behave differently than they do on land.

In her work, she needed to record underwater environments at various sites using multiple cameras (e.g., upstream and downstream) and compare the results with eDNA samples taken at the same time. The fish she is studying are all small (i.e., minnows) and move very fast: nothing stays in frame for more than a second or two, sometimes less.

The footage ends up being long stretches of cloudy water with no fish, then, suddenly, a fish! Then nothing again. It's difficult to find these fish manually in the collected footage, and easy to miss them completely as they race past in the current.

"Finding the fish in these videos is going to take you forever," she was told. Challenge accepted! So we built ACTION together. Using the existing AI models with our custom pipeline, we are able to transform hours of video footage into short, separate video clips that contain all the fish. After this, the serious work of species analysis and identification is much easier.

The process of building the tool together with my daughter was a lot of fun. Alongside the internship, she's taking a Bioinformatics course, where she's learning Unix, Bash scripting, and Python. This made it easy for us to choose our tech stack. As we iterated on the code, we were able to incorporate real-time feedback from the fieldwork and the data she was collecting. The feedback loop was amazing, since we quickly understood what would and wouldn't work with actual data.

For example, unlike with terrestrial camera traps, it became clear that having a sufficient buffer (i.e., extra video frames before and after each detection clip) was critical: while a raccoon might amble in front of your camera for 20 seconds, fish are there one moment and gone the next. You need extra frames so you can slowly scrub through them when identifying a flash of colour (was that a Redside Dace?).
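
Buffer logic is easy to get subtly wrong, because two fish a second apart should produce one clip, not two overlapping ones. A minimal sketch, assuming detections have already been reduced to timestamps in seconds (the function name and that representation are our assumptions for illustration, not ACTION's internals):

```python
def detections_to_clips(times, buffer_s, duration_s):
    """Turn detection timestamps (seconds) into merged (start, end) clip intervals.

    Each detection gets `buffer_s` seconds of padding on both sides, clamped to
    [0, duration_s]; overlapping or touching intervals are merged into one clip.
    """
    clips = []
    for t in sorted(times):
        start = max(0.0, t - buffer_s)
        end = min(duration_s, t + buffer_s)
        if clips and start <= clips[-1][1]:
            # Overlaps the previous clip: extend it instead of starting a new one.
            clips[-1] = (clips[-1][0], max(clips[-1][1], end))
        else:
            clips.append((start, end))
    return clips
```

With `times=[1.0, 2.0, 10.0]`, a 2-second buffer, and an 11-second video, this yields `[(0.0, 4.0), (8.0, 11)]`: the first two detections share a clip, and the last clip is clamped to the end of the video.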

The real-world testing helped us come up with a useful set of options that can be tweaked by the user depending on the circumstances: what should the confidence threshold be for reporting a positive detection? how long should each clip be at minimum? where should the clips get stored? should the detection bounding boxes get displayed or saved along with the clips? Week by week we coded, refined our approach, and tested it on the next batch of videos. Real-world testing on live data turns out to be the fastest path to victory.
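
One way to keep settings like these tweakable is to collect them in a single record. A minimal sketch: `ClipOptions` and `enforce_min_length` are hypothetical, as are the defaults, and they are not ACTION's actual option names or values. The helper shows one way to honour a minimum clip length without running past either end of the video.

```python
from dataclasses import dataclass
from pathlib import Path


@dataclass(frozen=True)
class ClipOptions:
    """Hypothetical user-tunable settings; names and defaults are placeholders."""
    confidence: float = 0.5          # minimum score for a positive detection
    min_duration_s: float = 3.0      # shortest clip worth saving
    buffer_s: float = 1.0            # padding before/after each detection
    output_dir: Path = Path("clips")  # where clips get written
    draw_boxes: bool = False         # overlay bounding boxes on saved clips


def enforce_min_length(clip, min_len_s, duration_s):
    """Grow a (start, end) clip to at least min_len_s seconds, staying inside the video."""
    start, end = clip
    missing = min_len_s - (end - start)
    if missing <= 0:
        return clip
    # Grow evenly on both sides first...
    start -= missing / 2
    end += missing / 2
    # ...then shift the window back inside [0, duration_s] if it spilled over.
    if start < 0:
        end -= start
        start = 0.0
    if end > duration_s:
        start -= end - duration_s
        end = duration_s
    # A video shorter than min_len_s simply yields the whole video.
    return (max(0.0, start), end)
```

For example, with the placeholder default of 3 seconds, a one-second clip at (5.0, 6.0) grows to (4.0, 7.0), while a clip near the end of a 100-second video is shifted left rather than extended past the last frame.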

Eventually the fish detection was working really well, and we wondered if we could extend it to work for terrestrial camera traps. Would the code work with MegaDetector, too? The answer turned out to be "yes," which was very rewarding. I've wanted to play with MegaDetector for years, but never had a reason to get started. Once the pipeline was built, swapping in a different AI model was doable (though not easy). We think that having this work for traditional camera traps, in addition to aquatic cameras, adds a lot to the potential usefulness of ACTION.

After we finished the project, we knew we had to share it. Imagine if this had existed when the internship started (it didn't, we looked!). We would love to see other people benefit from the same techniques we used. Also, the fact that YOLO-Fish and MegaDetector are both open source is an incredible gift to the scientific and software communities. We wanted to share, too.

Together we packaged things with pixi (thanks to the maintainers for accepting our fixes), learned how to do proper citations on GitHub, and used GitHub Releases to host our ONNX model files (pro tip: you can't use git-lfs for this). It was excellent real-world experience to see what's involved in shipping a cross-platform project.

For me, the most difficult technical problem was figuring out how to optimize the pipeline so it could run efficiently on our machines. We don't have access to fancy GPUs and needed something that would work on our laptops. The answer was twofold. First, we converted the models to ONNX format and used ONNX Runtime, instead of YOLO or PyTorch/TensorFlow, as our model runtime. This was a game changer, and it also let us throw away a whole host of dependencies. Finding the right magical incantations to achieve the conversions kept me up a few nights, but thanks to https://github.com/parlaynu/megadetector-v5-onnx and https://github.com/Tianxiaomo/pytorch-YOLOv4 we eventually got there. Second, we learned that we didn't need to analyze every frame in a video, and could use some tricks to make things faster by doing less (as always, the fastest code is the code you don't run).
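
Doing less can be as simple as running the model on only a subset of frames. A minimal sketch, assuming a fixed sampling interval (our illustrative assumption, not necessarily the trick ACTION uses; `sample_frame_indices` is a hypothetical name). Since the fish in this footage may be in frame for only a second or two, the interval has to stay well below that:

```python
def sample_frame_indices(total_frames, fps, interval_s):
    """Indices of the frames to run the model on: roughly one every interval_s seconds."""
    # Never step by less than one frame, even if interval_s is tiny or zero.
    step = max(1, round(fps * interval_s))
    return list(range(0, total_frames, step))
```

At 30 fps with `interval_s=0.5`, only every 15th frame is analyzed, cutting model calls by a factor of about 15. On the runtime side, ONNX Runtime's Python API can run such a model on the CPU alone, via `onnxruntime.InferenceSession(path, providers=["CPUExecutionProvider"])`.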

I learned a ton working on this project, and I'm thrilled to have been able to get involved in some of the amazing conservation biology research that I see my daughter doing all the time. At the start of the summer, I set myself the task of figuring out how to use AI and conservation technology together, and this was the best possible realization of that goal. It's very rewarding to have my skillset complement my daughter's (neither of us could have done this project on our own), and I'm excited that I finally got a chance to work with my favourite scientist!

Do me a favour: please go give https://github.com/humphrem/action a star on GitHub, try it out, tell your friends, and let us know if you use it. In the meantime, we'll look forward to seeing your citations roll in on Google Scholar.