I've spent the past few months building programs with GPT-3. It's amazing and it's forcing me to rethink so much about how I write code or approach problems. I was first inspired to try using it after reading Simon's great post about how to get started. Since then I have been reading as much as I can, most of it Twitter oral tradition that's being passed down in real time. For example, the @goodside Twitter thread is particularly inspiring, and I've learned a lot from tricks I see Riley using.

A lot of people are excited about ChatGPT and its human back-and-forth response style. However, I've been more interested in seeing how far I can push GPT as a source of data. I've spent a lot of time trying to force it to give me JSON data in a particular format. It can be frustrating. My own work is largely based on consuming or creating web APIs of one kind or another, and I've been exploring how one might leverage GPT to imagine new data sources and backends.

Ethan Mollick has written that prompting GPT should feel less like polite conversation and more like a detailed command to a "non-sentient machine to generate the text we need." Our prompts need to be very specific. We need to "push" it where we want it to go. He writes:

> Don’t ask it to write an essay about how human error causes catastrophes. The AI will come up with a boring and straightforward piece that does the minimum possible to satisfy your simple demand. Instead, remember you are the expert and the AI is a tool to help you write. You should push it in the direction you want. For example, provide clear bullet points to your argument: write an essay with the following points:
>
> - Humans are prone to error
> - Most errors are not that important
> - In complex systems, some errors are catastrophic
> - Catastrophes cannot be avoided

Rather than relying on serendipity, GPT works best when our prompts provide a well-defined shape for the language to fill.

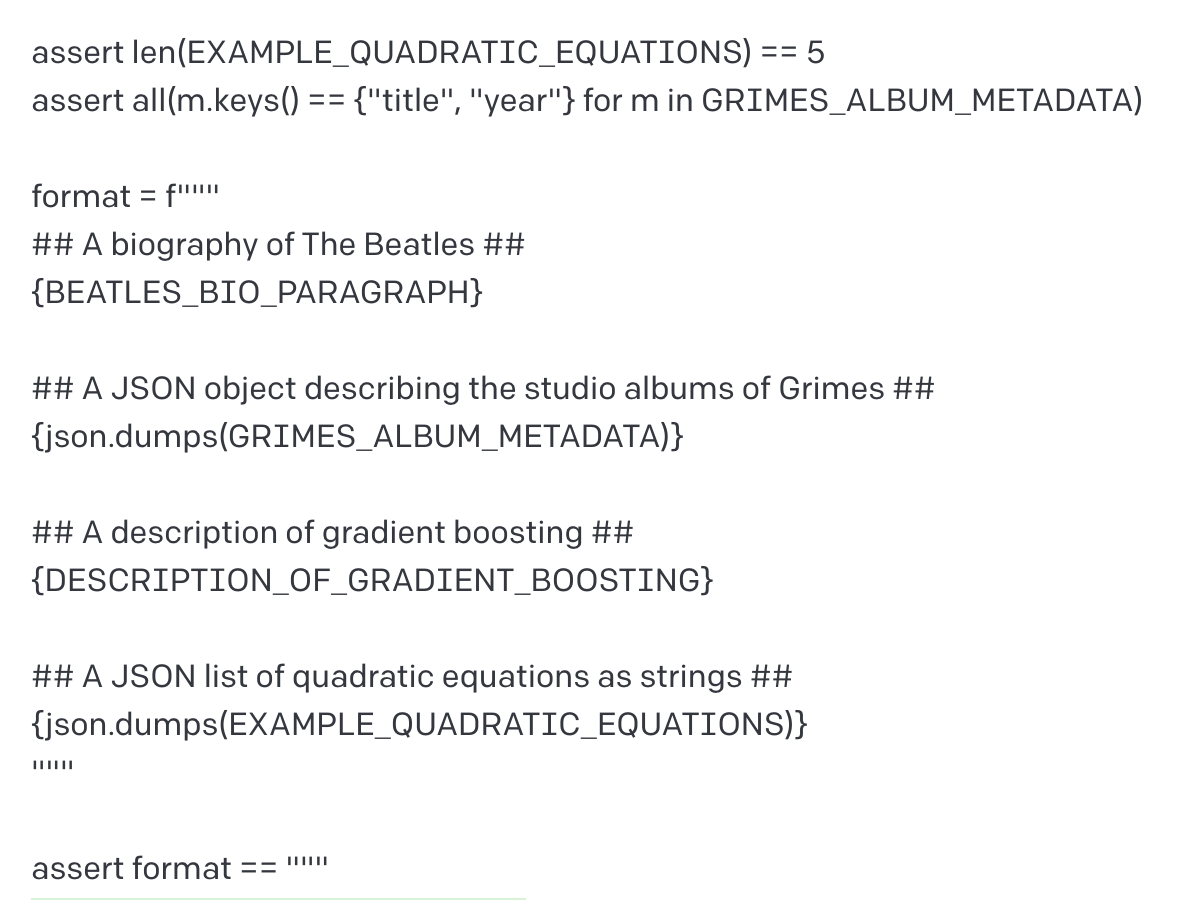

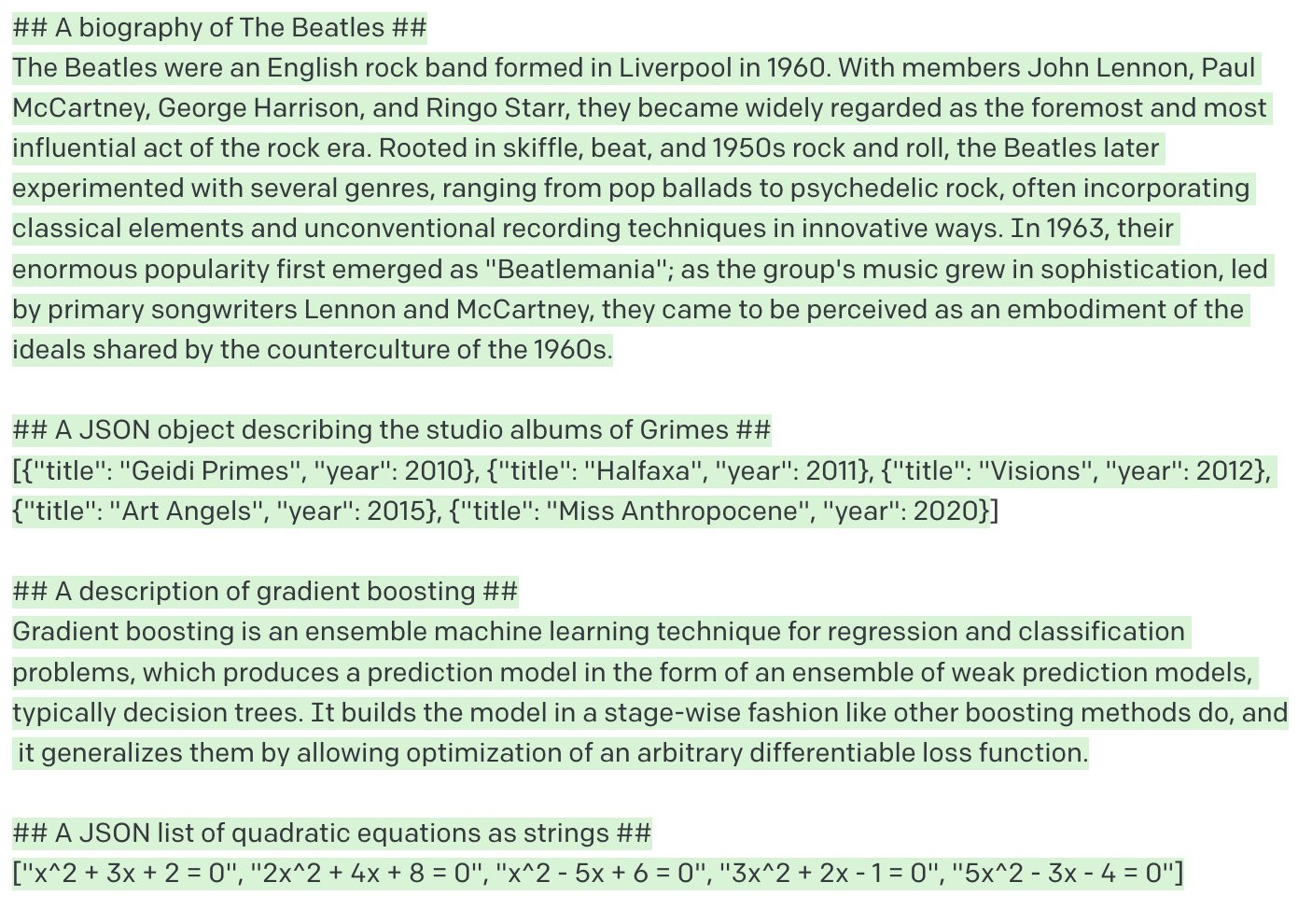

Two more recent ideas really got me thinking. Ian Bicking's Infinite AI Array planted the seed of an infinite data source for programming: What if your array didn't need to end? What if there was always more data? Added to that was this example from Riley, showing how to use Python assertions to first define the state and shape of your data, before asking GPT to produce it:

This blew my mind, and I haven't been able to close the tab! Think of the hundreds of hours I've spent writing code to validate data in programs, but here the tenets of these assertions become a world into which the data can be grown.

I wondered if I could go further. What if the entire prompt was a data schema? I decided to try working with JSON Schema. What's nice about it is that you can include all kinds of prompt-like info in the form of title and description, can specify things like whether or not an array has duplicates, specify patterns (e.g., regex), can use recursion for data types, etc. It's such a rich, compact format for defining a shape that you want your data to take.
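
To make those features concrete, here is a small illustrative schema, written as a Python dict so it can be serialized and dropped into a prompt. The comment-thread shape and every field name in it are invented for this sketch; only the keywords (`title`, `description`, `uniqueItems`, `pattern`, `$ref`) come from JSON Schema itself.

```python
import json

# Hypothetical schema for a comment thread. The field names are made up
# for illustration; the keywords are standard JSON Schema (draft 2020-12).
comment_schema = {
    "$schema": "https://json-schema.org/draft/2020-12/schema",
    "title": "Comment",
    "description": "A comment in a discussion thread, with nested replies.",
    "type": "object",
    "required": ["author", "posted", "text"],
    "properties": {
        "author": {"type": "string"},
        # pattern: a regex the string must match (here, YYYY-MM-DD)
        "posted": {"type": "string", "pattern": "^\\d{4}-\\d{2}-\\d{2}$"},
        "text": {"type": "string", "description": "One or two sentences."},
        # uniqueItems: the array may not contain duplicate tags
        "tags": {
            "type": "array",
            "items": {"type": "string"},
            "uniqueItems": True,
        },
        # recursion: each reply is itself a Comment ("#" is the root schema)
        "replies": {"type": "array", "items": {"$ref": "#"}},
    },
}

# Serialize to JSON text, ready to paste into a prompt.
print(json.dumps(comment_schema, indent=2))
```

The `title` and `description` strings do double duty here: they document the schema and also act as the natural-language part of the prompt.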

There might be better ways to do this, but here's my general pattern for prompting with JSON Schema:

```python
from jsonschema import validate

s = {
  "$schema": "https://json-schema.org/draft/2020-12/schema",
  ...
}

assert validate(instance=data, schema=s)

json.dumps(data) ==
```

Here, we define a schema, s, and tell GPT that our imaginary data adheres to this schema. We then ask it to fill in the right-hand side of the json.dumps(data) expression, which will produce our desired JSON.
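
If you want to generate these prompts from code rather than by hand, one way to sketch it is below. `build_prompt` is a hypothetical helper, not part of any library, and it only assembles text: the result is meant to be sent to the model as a prompt, not executed as Python. Sending it to GPT is left out, since that depends on whichever client you use.

```python
import json


def build_prompt(schema: dict) -> str:
    """Wrap a JSON Schema in the Python-flavoured prompt pattern shown above.

    Hypothetical helper for illustration; it returns prompt text only.
    """
    lines = [
        "from jsonschema import validate",
        "",
        # Embed the schema as JSON text assigned to `s`.
        f"s = {json.dumps(schema, indent=2)}",
        "",
        "assert validate(instance=data, schema=s)",
        "",
        # Left open on purpose: the model completes the right-hand side.
        "json.dumps(data) ==",
    ]
    return "\n".join(lines)


# A tiny example schema, made up for this sketch.
example_schema = {
    "$schema": "https://json-schema.org/draft/2020-12/schema",
    "title": "A single die roll",
    "type": "integer",
    "minimum": 1,
    "maximum": 6,
}

prompt = build_prompt(example_schema)
print(prompt)
```

Keeping the schema as a real dict means you can reuse the same object later to check whatever comes back.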

With this basic approach, you can now define the schema for any kind of data, and GPT will give it back to you. It's easy to experiment by taking some example schemas. Here's one:

```python
from jsonschema import validate

s = {
  "$schema": "https://json-schema.org/draft/2020-12/schema",
  "title": "Longitude and Latitude Values",
  "description": "A geographical coordinate.",
  "required": [ "latitude", "longitude" ],
  "type": "object",
  "properties": {
    "latitude": {
      "type": "number",
      "minimum": -90,
      "maximum": 90
    },
    "longitude": {
      "type": "number",
      "minimum": -180,
      "maximum": 180
    }
  }
}

assert validate(instance=data, schema=s)

json.dumps(data) ==
```

Not surprisingly, GPT responds like so:

```json
{
  "latitude": 45.4,
  "longitude": -75.7
}
```

We could bake more description and form into the prompt via the schema, too. Let's say we want this to be an array of 5 capital cities in Canada. We also want to include the area codes in a specific format. With the schema, I can accomplish all of this without needing to add anything else to the prompt's text (i.e., I don't have to fight with natural language to describe my end goal--the schema includes both):

```python
from jsonschema import validate

s = {
  "$schema": "https://json-schema.org/draft/2020-12/schema",
  "title": "Capital Cities in Canada",
  "type": "array",
  "minItems": 4,
  "maxItems": 5,
  "uniqueItems": true,
  "items" : {
    "required": [ "latitude", "longitude", "name", "codes" ],
    "type": "object",
    "properties": {
      "name": { type: "string" },
      "codes": {
        "type": "array",
        "description": "All area codes for the city",
        "items": {
          "type": "string",
          "pattern": "\(\d{3}\)"
        },
      },
      "latitude": {
        "type": "number",
        "minimum": -90,
        "maximum": 90
      },
      "longitude": {
        "type": "number",
        "minimum": -180,
        "maximum": 180
      }
    }
  }
}

assert validate(instance=data, schema=s)

json.dumps(data) ==
```

Again, GPT is happy to give me exactly what I've asked for:

```json
[
  {
    "name": "Ottawa",
    "codes": ["(613)"],
    "latitude": 45.4215,
    "longitude": 75.6972
  },
  {
    "name": "Victoria",
    "codes": ["(250)"],
    "latitude": 48.4284,
    "longitude": -123.3656
  },
  {
    "name": "Edmonton",
    "codes": ["(780)", "(587)"],
    "latitude": 53.5444,
    "longitude": -113.4909
  },
  {
    "name": "Winnipeg",
    "codes": ["(204)"],
    "latitude": 49.8951,
    "longitude": -97.1384
  },
  {
    "name": "Toronto",
    "codes": ["(416)", "(647)", "(437)"],
    "latitude": 43.7001,
    "longitude": -79.4163
  }
]
```

The JSON Schema docs are full of examples of how you can use enums, structure complex types, etc. So far, GPT has been able to handle every schema I've thrown at it.
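
Since the schema already spells out the rules, it's cheap to check what comes back before using it. Below is a minimal, standard-library-only sketch that mirrors the constraints of the Capital Cities schema above by hand. `check_cities` and its helper are hypothetical names, and this is not a general JSON Schema validator; a full validator such as the `jsonschema` package would cover far more than these few keywords.

```python
import json
import re

AREA_CODE = re.compile(r"\(\d{3}\)")  # same regex as "pattern" in the schema
REQUIRED = {"latitude", "longitude", "name", "codes"}


def is_number(value) -> bool:
    # JSON Schema "number" covers ints and floats, but not booleans.
    return isinstance(value, (int, float)) and not isinstance(value, bool)


def check_cities(response_text: str) -> list:
    """Parse a model response; return a list of problems (empty if none)."""
    cities = json.loads(response_text)  # raises ValueError on invalid JSON
    if not isinstance(cities, list):
        return ["top level is not an array"]
    problems = []
    if not 4 <= len(cities) <= 5:  # minItems / maxItems
        problems.append(f"expected 4 to 5 items, got {len(cities)}")
    # uniqueItems (approximate): compare canonical serializations,
    # since dicts are not hashable.
    if len({json.dumps(c, sort_keys=True) for c in cities}) != len(cities):
        problems.append("items are not unique")
    for i, city in enumerate(cities):
        if not isinstance(city, dict):
            problems.append(f"item {i}: not an object")
            continue
        missing = REQUIRED - city.keys()
        if missing:
            problems.append(f"item {i}: missing {sorted(missing)}")
            continue
        lat, lon, codes = city["latitude"], city["longitude"], city["codes"]
        if not (is_number(lat) and -90 <= lat <= 90):
            problems.append(f"item {i}: bad latitude {lat!r}")
        if not (is_number(lon) and -180 <= lon <= 180):
            problems.append(f"item {i}: bad longitude {lon!r}")
        # JSON Schema patterns are unanchored, hence search() not fullmatch().
        if not isinstance(codes, list) or not all(
            isinstance(c, str) and AREA_CODE.search(c) for c in codes
        ):
            problems.append(f"item {i}: bad area codes {codes!r}")
    return problems


# A deliberately broken, made-up response: too few items, bad area code.
bad = '[{"name": "Ottawa", "codes": ["613"], "latitude": 45.4215, "longitude": -75.6972}]'
for problem in check_cities(bad):
    print(problem)
```

Checks like this confirm shape, not truth. The Ottawa entry in the response above has a longitude of 75.6972, which is inside the allowed range but has the wrong sign (Ottawa is at roughly 75.7° W, as in the earlier single-coordinate example), so it would pass.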

Likely this approach isn't new, just new to me. But in case you want to use GPT for data generation, I wanted to share how this works. It's been really expressive and powerful for me.