It's been a month since I wrote about the work we're doing on ChatCraft.org. Since then we've been focused on a few things and I wanted to write about how they work.

First, Taras added support for OpenRouter, which Chigala Kingsley then extended. Previously, we could only work with OpenAI models, but now OpenRouter also gives us access to Google's PaLM, Anthropic's Claude, and Meta's Llama models. This is fantastic, because it pairs so nicely with our existing ability to work with different models in the same chat (i.e., send a prompt to any model, or retry a response with a different model). It's amazing being able to mix and match responses from so many LLMs across all these providers within the same tool. This is the real power of ChatCraft: it isn't beholden to any particular LLM or vendor.
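OpenRouter exposes an OpenAI-compatible chat completions endpoint. Because of that, retrying the same conversation with another model can come down to changing the `model` field. The sketch below only builds the requests and does not send them. `buildRetryRequests` is a hypothetical helper. The model IDs are illustrative and may not match OpenRouter's current catalog, and the API key is a placeholder.

```javascript
// OpenRouter's OpenAI-compatible chat completions endpoint.
const OPENROUTER_URL = "https://openrouter.ai/api/v1/chat/completions";

// Hypothetical helper: build one request per model for the same conversation,
// e.g., to retry a response with a different LLM.
function buildRetryRequests(messages, models, apiKey) {
  return models.map((model) => ({
    url: OPENROUTER_URL,
    init: {
      method: "POST",
      headers: {
        "Content-Type": "application/json",
        Authorization: `Bearer ${apiKey}`,
      },
      // Only the `model` field differs; the messages are shared.
      body: JSON.stringify({ model, messages }),
    },
  }));
}

const messages = [{ role: "user", content: "Explain CORS in one sentence." }];

// Illustrative model IDs; check OpenRouter's model list for real ones.
const requests = buildRetryRequests(
  messages,
  ["openai/gpt-4", "anthropic/claude-2", "meta-llama/llama-2-70b-chat"],
  "YOUR_OPENROUTER_KEY" // placeholder, not a real key
);

// Each request could then be sent with: fetch(req.url, req.init)
console.log(requests.map((r) => JSON.parse(r.init.body).model));
```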

Second, we've been working a lot on adding support for function calling and the ability to create tools. This is a feature that Taras and I have discussed at length for months, and it's been extremely difficult to nail down the UX. However, we've finally managed to ship an initial version.

OpenAI's API and ChatGPT have supported function calling for a while. The concept is this:

- You define the interface for a function (name, description, and schema for the arguments). Imagine a function that adds two numbers, or one that downloads a file and processes it somehow.

- When you send your prompt, you let the LLM know the function exists and either tell it to call the function or let it decide whether it needs to (i.e., function calls can be optional or required).

- If the LLM wants to call your function, it replies with a special message containing the function's name and the arguments it wants to pass, encoded as JSON.

- The LLM never calls the function itself; you have to do that on your own. When you're done executing the function on its behalf, you send the LLM the result and it continues processing.
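The round trip in the list above can be sketched in JavaScript. This is a minimal, hypothetical driver, not ChatCraft's code. It assumes OpenAI's 2023-era function-calling message format:

- The request includes a `functions` array and a `function_call` setting. `"auto"` lets the model decide, and `{ name: "..." }` requires that function.
- The model's reply carries a `function_call` whose `arguments` field is a JSON string.
- The result goes back in a message with `role: "function"`.

`callModel` stands in for the real API request. Here a fake model lets the flow run offline.

```javascript
// Hypothetical driver for the function-calling round trip (not ChatCraft's code).
// `callModel(request)` stands in for the real API call and must resolve to the
// assistant's message; `implementations` maps function names to local code.
async function runWithFunctions({
  callModel,
  messages,
  definitions,
  implementations,
  forceName, // optional: require this function on the first request
  maxSteps = 5,
}) {
  const history = [...messages];
  for (let step = 0; step < maxSteps; step++) {
    // Only force a call on the first request; otherwise the model could never
    // give a final answer.
    const functionCall = forceName && step === 0 ? { name: forceName } : "auto";
    const reply = await callModel({
      messages: history,
      functions: definitions,
      function_call: functionCall,
    });
    history.push(reply);

    // No function requested: this is the model's final answer.
    if (!reply.function_call) return { answer: reply.content, history };

    // The model never runs anything itself; we execute the call on its behalf.
    const { name, arguments: rawArgs } = reply.function_call;
    let result;
    try {
      const impl = implementations[name];
      if (!impl) throw new Error(`unknown function "${name}"`);
      result = await impl(JSON.parse(rawArgs));
    } catch (err) {
      // Report failures (bad JSON, unknown name, thrown errors) to the model.
      result = `Error: ${err.message}`;
    }

    // Send the result back so the model can continue processing.
    history.push({ role: "function", name, content: String(result) });
  }
  throw new Error(`No final answer after ${maxSteps} steps`);
}

// A fake model: first it asks for `sum`, then it answers using the result.
async function fakeModel({ messages }) {
  const last = messages[messages.length - 1];
  if (last.role === "function") {
    return { role: "assistant", content: `The total is ${last.content}.` };
  }
  return {
    role: "assistant",
    content: null,
    function_call: { name: "sum", arguments: '{"data":[2,3,5]}' },
  };
}

const definitions = [
  {
    name: "sum",
    description: "Calculates the sum of a list of numbers",
    parameters: {
      type: "object",
      properties: { data: { type: "array", items: { type: "number" } } },
      required: ["data"],
    },
  },
];

runWithFunctions({
  callModel: fakeModel,
  messages: [{ role: "user", content: "What is 2 + 3 + 5?" }],
  definitions,
  implementations: { sum: ({ data }) => data.reduce((a, b) => a + b, 0) },
}).then(({ answer }) => console.log(answer)); // The total is 10.
```

This sketch forces the call only on the first request, and that is deliberate. If `function_call` named a function on every round, the model would be required to call it again each time and would never produce a final answer.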

ChatGPT lets you do this, too, but you're not in control of the process or functions. We wanted to give users the ability to easily define and run their own custom functions, and to do so directly in their browser.

ChatCraft defines a function as an ES Module that includes the function and its metadata:

- The name of the function

- The description of the function

- The schema of the parameters (simplified JSON schema)

- A default function export, which is the function itself

As a simple example, consider a function that calculates the sum of a list of numbers. LLMs aren't great at math, so a function that can do the calculation is really useful. A function can do whatever you want, as long as it's possible with browser-based JavaScript (including calling web APIs that allow CORS). You return a Promise with your result, and we send it back to the LLM. If you want your data to be formatted in the UI, you can wrap it in a Markdown code block (i.e., fenced with three backticks and a language name).
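Here is a minimal sketch of such a `sum` module. It assumes the metadata is exposed as named exports called `name`, `description`, and `parameters`, next to the default export. Those export names are assumptions for illustration, so compare them against a function created in ChatCraft itself before relying on them. The function is declared `async` so it returns a Promise, and it wraps its result in a Markdown code block for display.

```javascript
// A minimal sketch of a function module. The named exports `name`,
// `description`, and `parameters` are assumed, not confirmed, export names.
export const name = "sum";

export const description = "Calculates the sum of a list of numbers";

// Simplified JSON Schema describing the arguments object the LLM must send.
export const parameters = {
  type: "object",
  properties: {
    data: {
      type: "array",
      items: { type: "number" },
      description: "The list of numbers to add together",
    },
  },
  required: ["data"],
};

// The default export is the function itself. Declaring it `async` means it
// returns a Promise, whose resolved value is what gets sent back to the LLM.
export default async function sum({ data }) {
  const total = data.reduce((acc, n) => acc + n, 0);
  // Wrap the result in a Markdown code block (three backticks plus a language)
  // so the UI can format it; "`".repeat(3) avoids a literal fence in this example.
  const fence = "`".repeat(3);
  return `${fence}json\n${JSON.stringify({ total })}\n${fence}`;
}
```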

These function modules can be written and hosted inside ChatCraft itself (https://chatcraft.org/f/new creates a new one), or you can host them as plain text somewhere on the web (e.g., as a Gist). In both cases, we load them dynamically and run them on demand for the LLM.
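This post doesn't show ChatCraft's loading code, but a common way to turn module source text into a live ES module is to import it from a `data:` URL. The sketch below assumes that approach, and `importModuleFromText` and `importModuleFromUrl` are hypothetical helpers. `importModuleFromUrl` expects a URL that returns the raw source as plain text; a Gist's page URL returns HTML, so its raw URL would be needed. A page's Content Security Policy may block `data:` imports. In that case, a Blob URL from `URL.createObjectURL()` is an alternative.

```javascript
// Hypothetical helper: load ES module source text as a real module by
// importing it from a percent-encoded data: URL.
async function importModuleFromText(source) {
  const url = "data:text/javascript," + encodeURIComponent(source);
  return import(url);
}

// Hypothetical helper: fetch a module hosted as plain text (the URL must
// return the raw source and allow CORS), then import it.
async function importModuleFromUrl(url) {
  const res = await fetch(url);
  if (!res.ok) throw new Error(`Failed to load ${url}: HTTP ${res.status}`);
  return importModuleFromText(await res.text());
}

// Usage: the module's exports are available on the returned namespace object.
importModuleFromText(`
  export const name = "sum";
  export default ({ data }) => data.reduce((a, b) => a + b, 0);
`).then((mod) => {
  console.log(mod.name, mod.default({ data: [1, 2, 3] })); // sum 6
});
```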

You tell the LLM about these functions using the syntax `@fn: sum` or `@fn-url: https://gist.github.com/humphd/647bbaddc3099c783b9bb1908f25b64e`, where `@fn` refers to the name of a function stored in your local ChatCraft database and `@fn-url` points to a remotely stored function.
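To make the syntax concrete, here is a hypothetical sketch of how a client could pull these references out of a prompt before sending it. The regular expression and the `parseFunctionRefs` name are illustrative assumptions, not ChatCraft's parser. Note that `\S+` would also capture any punctuation directly after a URL.

```javascript
// Matches either `@fn: <name>` (a locally stored function) or
// `@fn-url: <url>` (a remotely hosted module). Illustrative only.
const FN_REF = /@fn:\s*([\w-]+)|@fn-url:\s*(\S+)/g;

function parseFunctionRefs(prompt) {
  const refs = [];
  // matchAll requires the /g flag and iterates over every match.
  for (const match of prompt.matchAll(FN_REF)) {
    if (match[1]) refs.push({ kind: "local", name: match[1] });
    else refs.push({ kind: "remote", url: match[2] });
  }
  return refs;
}

const prompt =
  "Add 2, 3 and 5 using @fn: sum, or use " +
  "@fn-url: https://gist.github.com/humphd/647bbaddc3099c783b9bb1908f25b64e instead";

// Yields one local reference ("sum") and one remote reference (the Gist URL).
console.log(parseFunctionRefs(prompt));
```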

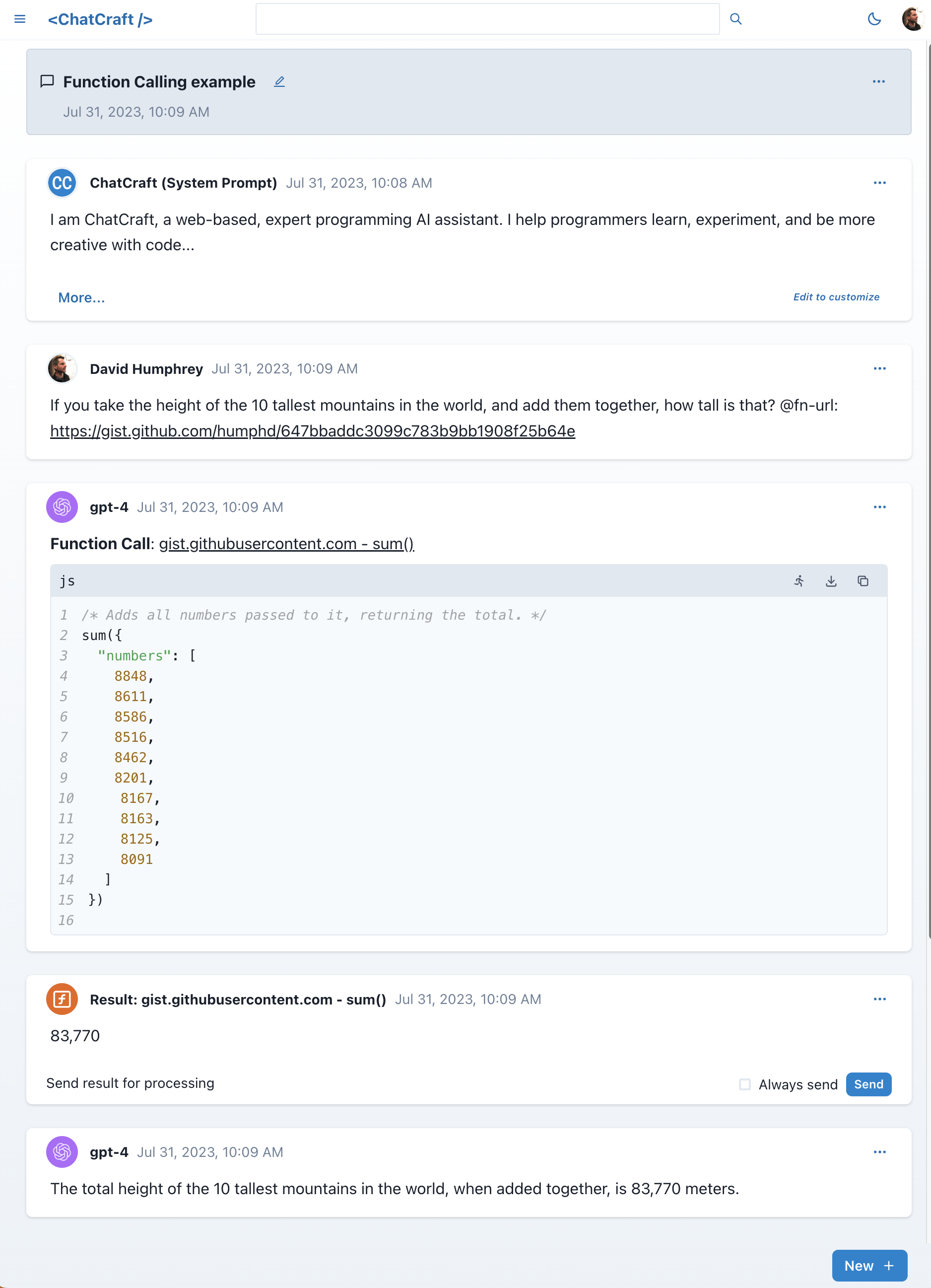

I shared a chat earlier today to demonstrate how this works, and you can see it here:

https://chatcraft.org/c/humphd/LtEkj_J3p66MkDadwzwrH

Here I use my remote function via the `@fn-url` syntax: GPT-4 asks to call it, ChatCraft runs it, and GPT-4 incorporates the result into its answer. We show every step of the process in the UI: the function call, the result, and what the LLM does with it. In theory, you could collapse all of this into one message, but we think "showing your work" is a more powerful approach.

That said, we're just getting started with the UX for this, and every time we test it, we find new ways to make it better or bugs that need to be fixed. However, it actually works, and we're really excited about it! It's very easy to experiment and get things running fast.

The real power of this feature is going to come from combining it with custom system prompts, where we give the LLM an initial context and set of functions it can use to achieve specific goals. Getting both of these features in place over the summer has opened up a very interesting space that we're excited to start exploring.
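As a rough illustration of that combination, the sketch below assembles a single request from a custom system prompt and a set of function modules. It makes three assumptions:

- Each module exposes `name`, `description`, and `parameters` exports. This is an assumption about the module shape.
- The request uses OpenAI's 2023-era `functions` field.
- `buildAgentRequest` is a hypothetical helper, and the `"gpt-4"` default is illustrative.

```javascript
// Hypothetical helper: combine a custom system prompt with a set of function
// modules into one chat request (OpenAI-style `functions` field assumed).
function buildAgentRequest({ systemPrompt, modules, userPrompt, model = "gpt-4" }) {
  return {
    model,
    messages: [
      // The system prompt sets the initial context and goals.
      { role: "system", content: systemPrompt },
      { role: "user", content: userPrompt },
    ],
    // Only each module's metadata is sent; its code stays in the browser.
    functions: modules.map(({ name, description, parameters }) => ({
      name,
      description,
      parameters,
    })),
    // Let the model decide when (or whether) to call a function.
    function_call: "auto",
  };
}

// Stand-in for a loaded module namespace (metadata plus default export).
const sumModule = {
  name: "sum",
  description: "Calculates the sum of a list of numbers",
  parameters: {
    type: "object",
    properties: { data: { type: "array", items: { type: "number" } } },
    required: ["data"],
  },
  default: ({ data }) => data.reduce((a, b) => a + b, 0),
};

const request = buildAgentRequest({
  systemPrompt: "You are a careful bookkeeper. Use the provided functions for all arithmetic.",
  modules: [sumModule],
  userPrompt: "What did I spend in total: 12.50, 8.25 and 3.10?",
});
console.log(JSON.stringify(request, null, 2));
```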

If you want to try this today for yourself, you're welcome to do so (it's live on ChatCraft.org). Let us know what you build, what breaks, and what you think we should do next.